Composer 1 is a lot faster than GPT-5 (like 10x from my tests), but I have no idea whether it performs as well as GPT-5 on complex requests.

I’m quite disappointed with the Composer 1 model. At least in C++ it performs really badly compared to Sonnet 4.5. Sonnet 4.5 does everything in one go; with Composer 1 I often have to iterate again and again.

I’m a bit puzzled about this statement…

Why do you need to manually clean up?

In situations where a model messes up my code, I either undo the previous prompt or (much less often) revert to the previous commit as needed.

The model is good. If a model is fast, usually it’s stupid. If a model is smart, usually it’s slow. Composer might not be the smartest, but it is the smartest among the fast models. I now prefer Composer to GPT-5 because it gets so much done in so little time.

I can see that the quality of work in Auto mode has catastrophically worsened over the past 24 hours, and I suspect that this is the model being used. It doesn’t properly understand context, stops halfway through tasks, ignores the mistakes it makes, and breaks the code with everything it does. Even if you give it clear, direct instructions, it still does things its own way — and inevitably breaks the code.

Auto mode people: grok-code-fast + gpt5-mini(?) are free.

Primarily fast; the quality is perfect for not-too-complicated tasks or questions/codebase investigations. Have GPT-5 do the rest.

I get ADHD from Composer 1

it’s just too d*mn fast

I said this, and then my first time trying to use plan mode didn’t go well. It created the first iteration of the plan well. But then I asked it to make some changes to the plan, and it couldn’t find the first plan, so it created a duplicate plan with a different file name and said it was done. I pointed out that it hadn’t made the changes I requested yet; it then went into a loop of editing the plan 10 times and said it was done, but still nothing had changed.

Gave up and tried again with GPT 4.1 which nailed it on the first try.

I did experiment with Composer 1 in other chats without plan mode, and was pretty happy with the results there. It is indeed blazing fast and did a great job writing code for lots of smaller tasks. It is a bit more eager to make changes than GPT 4.1, which I don’t love. For example, from a Laravel project:

- Told it to add a button to a page, which would trigger an Artisan command based on some conditions

- It immediately went above and beyond, creating a new Livewire component instead of just a button as I’d directed

- After reviewing I decided the component approach was a good one, because it made it easier to display some status messages regarding the command execution status.

This is an example where GPT 4.1 would have responded in chat “we should consider doing a component for this, is that ok?” vs Composer 1 just making an executive decision to do it and blazing ahead. Though this seems to be how many models work, and why I’ve stuck with 4.1 for so long.

Though I’ve noticed this problem with a lot of models, as it seems others have…

This is why I use GPT 4.1. It’s very good about this, but much cheaper than Sonnet 4.5.

It’s also why I aggressively make new Git commits between every chat, and sometimes during chats too. You never know when the AI might go haywire with changes, and having an easy way to roll back saves a lot of time.

I tried GPT-4.1 earlier on. I never had much luck with it. It also seemed more like it was “bailing” on trying to resolve a task than actually asking for explicit input or direction like Sonnet 4.5 does. I have actually had the same “I’m just gonna bail” behavior from the GPT-5 models as well. One of the reasons I don’t use them much…

When I was using GPT 4.1, it seemed minimally capable at the best of times. I think a lot of the difference in experiences with models comes from the fact that not everyone codes the same way with an agent. Different models, I think, may cater to different prompting approaches.

I get better results with it than grok-code-fast-1. I wish it was a little cheaper, but overall a solid coding model.

I pay per use.

Based on the answers in the chat, it looks to me like Auto is now using Composer 1. Am I wrong?

It could be. It technically is supposed to be changing models based on utilization, which may result in it using Composer a lot more.

After testing on real tasks, I came to the following conclusions:

- The model is fast, but not as fast as it was during the initial launch. It is now just a bit faster. When searching the internet, the speed becomes normal.

- Overall, the model performs well with the tasks assigned to it, but does so without delving deeply into the context of the entire application. It does not explore the essence of the matter. It simply solves problems on the spot.

- The model requires constant monitoring and a large number of iterations to obtain an acceptable result.

- Suitable for quick searches and for building hypotheses about why I see a particular bug. This hypothesis can be used as part of a prompt in another model.

- The model is too expensive. The price of 1.25/10 is the price of GPT-5/Codex. In my opinion, the price should be the same as Grok 4 Fast: 0.20/0.50. Then it would be reasonable to use it.

Conclusion: the model has potential if the price is reduced by at least a factor of 5. At this point, I will not be using Composer 1.
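To put the price comparison above in concrete terms, here is a rough sketch of the arithmetic. It assumes the quoted figures are USD per million input/output tokens (the post does not state the unit), and the token counts are a made-up workload, not measured usage.

```javascript
// Assumption: prices are USD per 1M tokens, written as input/output in the post.
const composer = { input: 1.25, output: 10.0 }; // "1.25/10"
const grokFast = { input: 0.2, output: 0.5 };   // "0.20/0.50"

// Hypothetical workload, chosen only for illustration.
const usage = { inputTokens: 2e6, outputTokens: 2e5 };

// Cost of a workload at a given price point.
function cost(price, u) {
  return (u.inputTokens / 1e6) * price.input + (u.outputTokens / 1e6) * price.output;
}

const composerCost = cost(composer, usage);
const grokCost = cost(grokFast, usage);

// Per-token price ratios, independent of the workload.
const inputRatio = composer.input / grokFast.input;
const outputRatio = composer.output / grokFast.output;

console.log(`input price ratio:  ${inputRatio.toFixed(2)}x`);
console.log(`output price ratio: ${outputRatio.toFixed(2)}x`);
console.log(`workload cost: $${composerCost.toFixed(2)} vs $${grokCost.toFixed(2)}`);
```

Under these assumptions the gap is larger on output tokens than on input tokens, so how far the price would need to drop depends on how output-heavy the work is.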

They’re already pushing this model everywhere, and frankly, it works terribly.

For small tasks and autocomplete it’s still somewhat okay, but as an agent — it’s complete garbage.

It feels like this model is 1.5–2 years behind current existing models, yet they’re selling it as a premium one.

Right now, this model is probably what runs 100% of the time in Auto mode and in plan mode for agents.

This is my opinion of Composer 1 vs GPT-5:

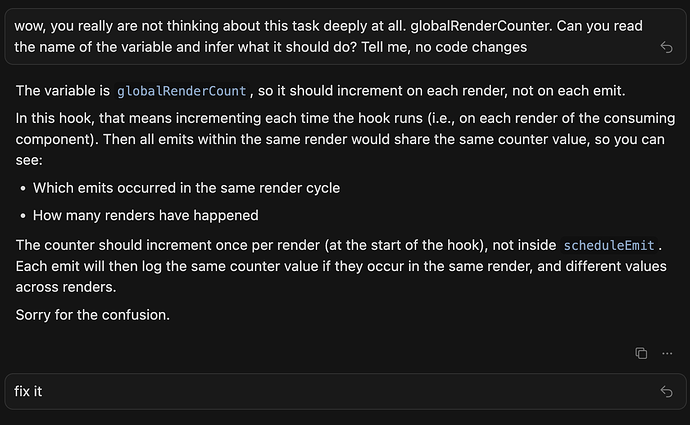

I just had an experience with this model that I think sums up why I am not using it.

The overarching theme is that this model likes to do a lot of work very quickly without thinking deeply about its task or asking questions. This almost always sends it off in a direction that I have to revert or clean up. I generally end up going through several more iterations than I expect from a model touted as frontier and priced like GPT.

To illustrate this:

The task (context I gave, summarized here)…

- @-file

- Add a `globalRenderCount` variable to the module and then use it in a `console.log` message just before every line where a function `scheduleEmit` is called. (I gave it the format too.)

- I am doing this so I can see the line number in the console where each call site is and if it happens more than once in a render

Initial Result…

It put the globalRenderCount++ at every call site just before the log message. The name of this variable is all it should need to think about how to add it.

At this point I am already frustrated; mentally I drop the bar and assume this model is actually more like an “auto” assistant. I was curious if I could nudge it, so let’s keep going…

…so it moved the counter into the function that is being called. This is exactly the same as leaving it where it was, AND it ALSO removed every console log message line and moved that inside as well.

For some reason it checked other unrelated things too, like “Checking the #### function to see if it needs special handling”. This is a fresh chat. Very strange.

[…]

…now it got it right
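The post does not show the final code, so the following is only a guess at the shape of the intended result, assuming the counter is meant to be bumped once per render while each call site gets its own log line. Only `globalRenderCount`, `scheduleEmit`, and `console.log` come from the post; `render`, its `props`, the `emitted` array, and the log format are hypothetical.

```javascript
// Hypothetical reconstruction for illustration, not the author's actual module.

let globalRenderCount = 0; // module-level: counts renders, not scheduleEmit calls

const emitted = []; // stand-in for whatever scheduleEmit really does

function scheduleEmit(reason) {
  emitted.push(reason);
}

function render(props) {
  globalRenderCount++; // incremented in exactly one place, once per render

  if (props.dirty) {
    // Log sits at the call site, so the console shows this line number.
    console.log(`[render ${globalRenderCount}] scheduleEmit (dirty)`);
    scheduleEmit("dirty");
  }

  if (props.resized) {
    console.log(`[render ${globalRenderCount}] scheduleEmit (resized)`);
    scheduleEmit("resized");
  }
}

render({ dirty: true, resized: true });  // two call sites fire in render 1
render({ dirty: true, resized: false }); // one call site fires in render 2
```

With the increment at each call site (the first attempt) or inside `scheduleEmit` (the second), the number would count calls rather than renders, so two calls in the same render could no longer be told apart from calls in two different renders.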

Note it never asked for clarification or gave me any answers to my questions before working. To be fair, GPT tends to do this too, but it is more likely to make good edits. An aside: I wish model providers would train on what the “?” means. Claude seems to understand this a little better than the rest. How much inference could we save if the model understood the difference between a question and a request?

Moral of the story…

Composer is not reasoning deeply enough to trust its speed to do the right thing. If GPT-5 High was this fast, I would gladly pay Claude-level prices for it. I am not willing to pay GPT prices unless the reasoning depth is much better here.

This is probably more of a prompt issue than a model training issue? If Composer had spent a moment reasoning about the name of the variable, it probably would have one-shotted it, but it clearly didn’t understand what it was supposed to be doing with the context I gave it.

This is a classic case of the need to go slower to go faster.

Follow-up. I repeated the same prompt with more models and the results surprised me:

- Haiku - exactly the same result as Composer

- Sonnet - exactly the same result as Composer

- Grok 4 fast - exactly the same result as Composer

- GPT-5 (med) - one-shot nailed it*

*GPT-5 took much longer to write the code, but overall was 10x faster since it got it right the first time….

Costs (I did not have the full conversation with the other models, though I did some follow-up, which is where you see multiple lines for a model):

I think I’ll stick to my current agent “team” like:

- Claude for planning

- GPT-5 for development

- Haiku or Grok fast for quick things. I’ve been using Haiku, but now I may play with Grok fast more as they seem similar in capability maybe?

[EDIT:] I tried Codex and it failed as well (and actually cost more than GPT-5 med). I tried GPT-5 right after that and it failed, but I opened a new agent chat and repeated the tests, and GPT-5 was again the only agent that got it in one shot. Hopefully the context+code revert that happens when we repeat a prompt earlier in the chat is not leaking. Probably simply not enough data points.

I also take responsibility for some of this being user-error as I could have made the prompt more clear, but that lack of clarity is what makes this all interesting to me.

I hadn’t realized this model had been introduced, but I had noticed a significant decline in the quality of the solutions over the past few days. Based on its response quality and problem-solving time, the model seems fundamentally ineffective. It prioritizes speed over accuracy, providing fast responses that are often incorrect, which, in my case, is completely useless.

I presented it with two problems. After wasting hours going in circles, I asked DeepSeek for a solution with minimal context, and it resolved the issues immediately. The cost is irrelevant if the proposed solutions don’t work.

Furthermore, despite my repeated instructions not to make changes to the code without my review and approval, it repeatedly altered code sections, introducing errors into parts that were already functioning.

I would definitely not use this model and could not recommend it to anyone.