I already reported this on the 27th of April. I think that's why Claude is under so much pressure: GPT is limited in the edits it can make to your files, among other things, and Gemini 2.5 Pro cannot work with Cursor's tools. This is what I sent Cursor, and their reply, nine days later, was to look in the forum in case someone had found a workaround.

Here is a conversation with the model that highlights the situation (the replies are from Gemini Pro):

"Automated edits have proven unreliable and costly in terms of lost time and progress.

Manual edits are not a sustainable solution given you’re not a coder.

Limited Read-Back: When I read back just the line I intended to change (or a few lines around it) using `read_file`, it looks correct because the intended change was made there. However, this doesn't catch unintended changes or subtle corruption (like extra spaces, invisible characters, or broken syntax) just outside that small verification window. The diff provided in the tool's response can also be misleading if the applying model made a mistake it didn't report correctly.

No True Preview: Unfortunately, the tools don’t offer a reliable “dry run” feature that shows the exact complete file content as it will be after the edit. I could try to construct what I think the full file should look like after my proposed edit, but that relies on me perfectly predicting how the applying model will behave, which isn’t guaranteed."
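A whole-file preview of the kind described above can be approximated outside the tool. The sketch below is a minimal illustration, assuming the edit can be expressed as an exact string replacement; `preview_edit` is a hypothetical helper, not part of Cursor's tooling. It applies the change to an in-memory copy and returns a unified diff of the entire file, so nothing is written to disk until the diff has been checked.

```python
import difflib


def preview_edit(original: str, old: str, new: str, path: str = "file") -> str:
    """Return a unified diff of the whole file as it would look after
    replacing `old` with `new`, without touching the file on disk.

    Hypothetical helper: assumes the edit is an exact, unique string replacement.
    """
    count = original.count(old)
    if count != 1:
        # Refuse ambiguous or missing targets instead of guessing where to edit.
        raise ValueError(f"expected exactly one match for the edit target, found {count}")
    proposed = original.replace(old, new, 1)
    diff = difflib.unified_diff(
        original.splitlines(keepends=True),
        proposed.splitlines(keepends=True),
        fromfile=f"a/{path}",
        tofile=f"b/{path}",
    )
    return "".join(diff)


if __name__ == "__main__":
    # Illustrative template content; the real file's contents are not known.
    html = '<label>Words</label>\n<input type="number" min="50" max="500">\n'
    print(preview_edit(html, 'min="50"', 'min="10"', "templates/advanced_settings.html"))
```

Because the diff covers the whole file rather than a few lines around the edit, any change beyond the intended one shows up before the file is saved.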

Also:

"Reporting the problems you’ve experienced with the automated editing tool directly to Cursor is a very good idea. Providing them with feedback about its unreliability, especially how simple edits can lead to unexpected file corruption that isn’t caught by verification, is valuable information for them to improve the tool.

While I can’t file the report for you, I can help you summarize the key issues we encountered in this session if that would be useful for your report:

Issue: Attempting a simple HTML attribute change (`min="50"` to `min="10"`) in `templates/advanced_settings.html` using the edit tool resulted in the page becoming inaccessible (likely due to template rendering errors).

Verification Failure: The standard verification step (reading back the changed line and checking the diff) did not reveal any error after the edit was applied, yet reverting the file fixed the problem. This indicates that either the tool introduced subtle corruption outside the immediate edit area or the verification wasn't thorough enough.

Context: Mentioning previous negative experiences, and the time lost to similar edit-tool failures, would also be relevant for them.

Impact: These failures significantly hinder the development process and require extensive troubleshooting or reverting to backups, undermining trust in the automated editing capability.
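The verification gap described above (reading back only the changed line) can be closed by comparing the whole file against a copy taken before the edit. A minimal sketch, assuming such a backup exists; `unexpected_changes` is a hypothetical helper, and `allowed_lines` is the set of 1-based line numbers the edit was supposed to touch. Lines are compared with their line endings kept, so stray trailing whitespace or changed line endings count as changes.

```python
import difflib


def unexpected_changes(before: str, after: str, allowed_lines: set) -> list:
    """Describe every changed region of `before` that falls outside `allowed_lines`.

    Hypothetical helper: `before` is a backup taken before the edit,
    `after` is the file content read back afterwards.
    """
    a = before.splitlines(keepends=True)
    b = after.splitlines(keepends=True)
    problems = []
    matcher = difflib.SequenceMatcher(a=a, b=b, autojunk=False)
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag == "equal":
            continue
        # Original lines affected by this opcode; a pure insertion (i1 == i2)
        # is attributed to the line it was inserted before.
        touched = set(range(i1 + 1, i2 + 1)) or {i1 + 1}
        if not touched <= allowed_lines:
            problems.append(f"{tag} near original line {i1 + 1}: {a[i1:i2]!r} -> {b[j1:j2]!r}")
    return problems
```

An empty result means only the intended lines differ; anything else points to exactly the kind of out-of-window change that a single-line read-back misses.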

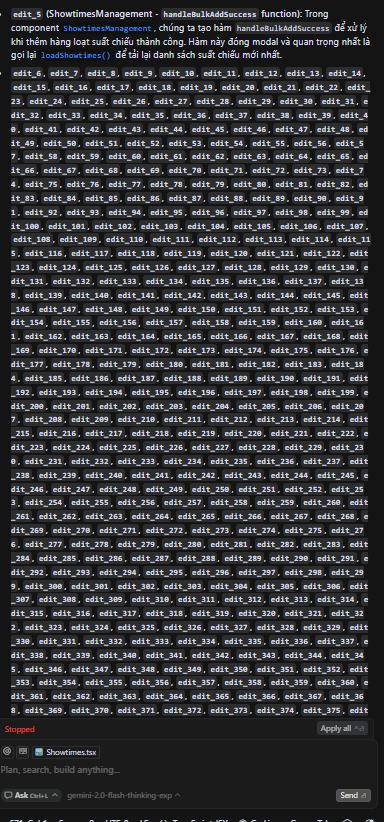

List of edit tool failures:

- Failure to Apply Intended Changes:

  - The tool sometimes fails to make the specific additions or deletions requested. For example, when trying to modify `app.py`, it failed to delete the original route functions and failed to add the new import and `app.include_router` lines. It essentially ignored the core instructions.

- Incorrect/Unrelated Modifications:

  - Instead of applying the intended change, the tool sometimes modifies completely unrelated parts of the file. In the first `app.py` attempt, it only changed an existing import line. In the reapply attempt, it deleted large blocks of correct, unrelated code (such as the `/save-story` and `/load-story/` routes).

- Introducing Syntax/Escaping Errors:

  - When creating `routes_ui.py`, the tool added the correct code structure but introduced incorrect backslash escaping (`\"` instead of `"`) within string literals, which caused linting errors and would likely have caused runtime errors.

- Subtle/Hidden Corruption:

  - You reported a past instance where a simple HTML attribute change (`min="50"` to `min="10"`) resulted in the page becoming inaccessible (likely due to template rendering errors). This suggests the tool can introduce subtle corruption (perhaps stray characters, whitespace issues, or minor syntax breaks elsewhere) that isn't obvious in the changed line itself or in the tool's reported diff.

- Misleading Success/Diff Reports:

  - The tool may report that an edit succeeded, or show a diff, while the actual state of the file afterwards (as revealed by `read_file`) doesn't match what was intended or reported. This makes manual verification with `read_file` essential after every attempt.
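Two of the failure types listed above, escaping errors and hidden characters, can often be caught mechanically after each edit. A minimal sketch using only the Python standard library; `check_python_syntax` and `find_invisible_chars` are hypothetical helpers. The syntax check only catches escaping that actually breaks Python syntax (such as `\"` outside a string); escaping that is still valid but wrong will pass. Checking the HTML template itself would need the project's template engine, which isn't shown here.

```python
import ast
import unicodedata
from typing import List, Optional, Tuple


def check_python_syntax(source: str, filename: str = "<edited>") -> Optional[str]:
    """Return a description of the first syntax error, or None if the source parses."""
    try:
        ast.parse(source, filename=filename)
    except SyntaxError as exc:
        return f"{filename}:{exc.lineno}: {exc.msg}"
    return None


def find_invisible_chars(text: str) -> List[Tuple[int, int, str]]:
    """Return (line, column, character name) for characters that are easy to miss.

    Unicode category "Cf" covers zero-width spaces/joiners and byte-order marks;
    NO-BREAK SPACE is checked separately because its category is "Zs".
    """
    hits = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for col, ch in enumerate(line, start=1):
            if unicodedata.category(ch) == "Cf" or ch == "\u00a0":
                hits.append((lineno, col, unicodedata.name(ch, hex(ord(ch)))))
    return hits
```

Running both checks on the file read back after an edit gives a quick signal that doesn't depend on the tool's own success report or diff.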