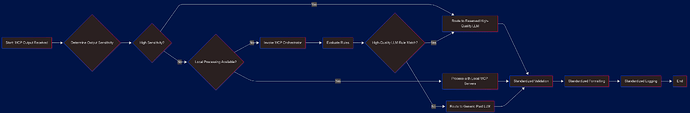

I would like to contribute a bit to this topic of implementing a software development workflow in terms of MCPs. Apart from orchestrating MCPs, in practice one also needs to orchestrate the LLMs they communicate with. Sending all results from MCPs to a paid generic LLM is overkill. As soon as we split the work among specific workers (aka MCPs), we want to assign specific LLMs to each step as well. The assignment need not be one-to-one: it can be 2 or 3 local LLMs that handle massive request contexts for free, plus a single external paid LLM as the last line of defence. As far as I can see, Cursor does not support assigning LLMs to agents, unlike Theia. This can make the whole workflow needlessly expensive at production scale.
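To make the idea of per-step model assignment concrete, here is a minimal Python sketch. Everything in it is hypothetical: `Step`, `run_step`, and the stub backends are illustration names only, and each "model" is just a function that returns text or `None`. It is not how Cursor or Theia does it. It only shows trying free local models first and escalating to the paid one as a last resort.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple

# A model backend is "prompt in, text out (or None on failure)".
# Hypothetical stand-in, not a real client API.
ModelFn = Callable[[str], Optional[str]]


@dataclass
class Step:
    name: str
    local_models: List[ModelFn]  # free local models, tried in order
    paid_model: ModelFn          # external paid model, last resort


def run_step(step: Step, prompt: str) -> Tuple[str, str]:
    """Return (tier, answer); use the paid model only if every local one fails."""
    for model in step.local_models:
        answer = model(prompt)
        if answer is not None:
            return "local", answer
    answer = step.paid_model(prompt)
    if answer is None:
        raise RuntimeError(f"all models failed for step {step.name!r}")
    return "paid", answer


# Stub backends for illustration only.
def local_summarizer(prompt: str) -> Optional[str]:
    return f"summary({len(prompt)} chars)"


def local_flaky(prompt: str) -> Optional[str]:
    return None  # simulates a local model that cannot handle the request


def paid_reviewer(prompt: str) -> Optional[str]:
    return "reviewed"


summarize = Step("summarize", [local_summarizer], paid_reviewer)
validate = Step("validate", [local_flaky], paid_reviewer)
```

With these stubs, `run_step(summarize, ...)` stays on the local tier, while `run_step(validate, ...)` falls through to the paid model because its only local model returns `None`.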

You can always use an intermediary MCP that acts as a manager: it forwards the requests internally to other LLMs, then passes the final result to the Agent.

Ideally, for complex workflows you would need a proper workflow setup. Many frameworks and programming languages offer this as packages/extensions that handle the whole management and communication.

@normalnormie You’re absolutely right about the +10% improvement from using Personas. Narrowing the context to align with specific expertise reduces ambiguity and improves model outputs. The absence of an MCP in frameworks like CrewAI or NPCSH highlights a gap in their design. An intermediary MCP could act as a bridge here, managing task-specific workflows and ensuring outputs are properly contextualized. This is worth exploring further, especially for scaling such systems.

@resosiloris If you have any specific ideas or use cases you’d like to contribute, feel free to share—we’re always open to collaboration.

@nick2 Sending all MCP outputs to a generic paid LLM is indeed inefficient and costly. That said, the sequential reasoning servers and MCPs being used are open source, so nobody has to pay for them. Right now we rely on external servers to do the work, when much of it could be done locally.

- We can reserve the high-quality models for final validation or high-stakes outputs where precision is critical.

As far as I understand, we would need an MCP orchestrator based on predefined rules, with the usage of and requirements for Python defined inside those rules. That will be pushed soon together with the Jira implementation, as @atalas mentioned above. As we move forward, a potential workaround could be building a lightweight orchestration layer outside Cursor that manages the models through explicit MCP assignments. If you could please open a pull request with the newest implementation, I can merge it into the main branch.

@condor It would act like a traffic controller then. In that case the main routing of tasks to the LLMs has to happen internally, with the final output consolidated for the Agent. And since Python is going to be implemented, we can get better results with asyncio, which makes scaling these workflows much more sustainable in the long run.
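A rough sketch of that traffic-controller idea with asyncio, under loud assumptions: `lint_worker`, `check_worker`, `route`, and `run` are made-up names, and the workers are stubs where a real setup would call local models. It is not the implementation in the repo. It only shows fanning a task out concurrently and consolidating one reply for the Agent.

```python
import asyncio
from typing import Awaitable, Callable, Dict

# Hypothetical worker: an async callable that takes a task and returns text.
Worker = Callable[[str], Awaitable[str]]


async def lint_worker(task: str) -> str:
    await asyncio.sleep(0)  # stands in for a call to a local model
    return f"lint ok: {task}"


async def check_worker(task: str) -> str:
    await asyncio.sleep(0)  # stands in for a call to another local model
    return f"tests ok: {task}"


async def route(task: str, workers: Dict[str, Worker]) -> str:
    """Send the task to every worker concurrently and merge the replies."""
    names = list(workers)
    # gather returns results in the same order as the awaitables passed in;
    # return_exceptions=True keeps one failing worker from sinking the rest.
    results = await asyncio.gather(
        *(workers[name](task) for name in names), return_exceptions=True
    )
    lines = []
    for name, result in zip(names, results):
        if isinstance(result, Exception):
            lines.append(f"[{name}] failed: {result}")
        else:
            lines.append(f"[{name}] {result}")
    return "\n".join(lines)  # single consolidated reply for the Agent


def run(task: str) -> str:
    """Synchronous entry point, e.g. what an MCP tool handler might call."""
    return asyncio.run(route(task, {"lint": lint_worker, "check": check_worker}))
```

The workers run concurrently, so the total wait is roughly that of the slowest worker rather than the sum of all of them, which is where the scaling benefit comes from.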

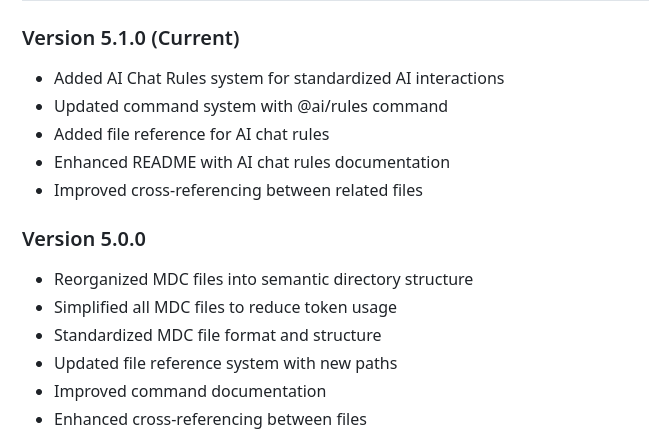

A new push will be made on GitHub in the upcoming hours. We are pushing the Jira implementation as well, along with a fully structured organization of the rules. @atalas, regarding the wording and length of the multiple .mdc files: I’m working on them right now, making the new push more organized and structured.

We are live now with the new update.

This thread made me feel really stupid, and im really fn smart. but also /edibles

You’re fun

haha, come on we all have something in mind to share.

Sending you massive hug.

Latest update:

Working Reference:

Reworking the whole rule set to be more comprehensive and simpler.

Expect the unexpected.

@deanrie How are you? Could you kindly lock this thread, as I will no longer be maintaining it and have transitioned to a newer, more focused guide?

Thank you.

Updated Thread: