I’m thinking of adding a document with recommendations on prompting to Agent Compass. I’ll take your comments into account. Thanks for the feedback!

I haven’t worked with code much over the last couple of weeks, but my feeling is that Auto plus GPT-5-High is enough for now. It is still worth testing Claude-4 against GPT-5 in the QA engineer role, though.

GPT-5-High is slightly cheaper than o3 and about on par with Gemini 2.5 Pro on price, but it solves problems better. Grok 4 is still broken and slower than GPT-5. It will be interesting to see the upcoming Gemini 3.0 Pro.

I’ll also try to finish Agent Enforcer and rewrite Agent Docstrings before the free OpenAI promo runs out. But I’m not so sure about Agent Viewport anymore, since Figma rolled out their own LLM wrapper (which I haven’t tried myself), and back in spring its absence was a real pain for me.

Are there any aspects of your guide you’d change now that Auto mode is moving to usage-based pricing?

■■■■, did they secretly change the pricing again for everyone who doesn’t stalk their socials and website 24/7?

Alright, fine, that’s only gonna be from September 15, so MAYBE they’ll be able to notify everyone through the IDE in advance.

I really should update the guide with GPT-5 in mind, but I haven’t been doing much coding these past few weeks…

hi @Artemonim any specific changes you would recommend for GPT-5 in guides or just adding the model?

Well, I’ve basically switched over to GPT-5 now.

Feels like Gemini is still better when it comes to architecture and documentation.

And I haven’t had the chance to test how good GPT-5 is at debugging compared to Claude 4 Sonnet.

I checked the upcoming pricing for Auto: it looks like instead of Auto you could just use GPT-5 Mini. But Auto had one of the Claude models inside, and it was a pretty nice alternative because it approached tasks a bit differently.

My earlier recommendation about o3-Pro is probably outdated. As for Opus, I’m not sure, but from what I’ve heard it’s not enough of an improvement over GPT-5 to justify the higher price.

Too bad Grok 4 is still broken in Cursor, and even when it’s not broken, it’s just way slower than GPT-5.

And it would also be worth testing GPT-5 Mini against o4-mini, since they cost about the same.

I really want the GPT-5 flex mode, because I have more time than money.

And it would also be interesting to look at Grok 4 Heavy in the IDE.

By the way, I still don’t understand how to choose between normal, High and Low.

It’s the same feeling of working with a slot machine as when I was learning how to work with txt2img StableDiffusion.

GPT-5 has Normal/Low/High as reasoning efforts.

- Low uses fewer reasoning tokens and is the better choice when the AI does not need to come up with its own solution.

- High uses many more reasoning tokens and works best when the solution is not self-evident but enough information is provided for the AI to reason through the steps.

- The -low variant would likely save some tokens compared to regular GPT-5.
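To make the rule of thumb above concrete, here is a minimal sketch of it as a small decision function. Everything in it is hypothetical: `Task`, its two fields, and `pick_effort` are names made up for illustration and are not part of Cursor or any OpenAI API. Falling back to "normal" when the task needs an original solution but the context is thin is my own assumption, since the list above doesn't cover that case.

```python
from dataclasses import dataclass


@dataclass
class Task:
    """Hypothetical description of a coding task (not a real API type)."""
    needs_own_solution: bool  # must the model devise the approach itself?
    has_enough_context: bool  # is enough information provided to reason it through?


def pick_effort(task: Task) -> str:
    """Map the rule of thumb from the list above to an effort label."""
    if not task.needs_own_solution:
        # The approach is already spelled out: fewer reasoning tokens suffice.
        return "low"
    if task.has_enough_context:
        # Non-obvious solution, but the model has what it needs to reason.
        return "high"
    # Assumption: no guidance was given for this case, so use the default.
    return "normal"


if __name__ == "__main__":
    # Applying a fix that is already described step by step.
    print(pick_effort(Task(needs_own_solution=False, has_enough_context=True)))
    # Tracking down a bug with full logs and sources attached.
    print(pick_effort(Task(needs_own_solution=True, has_enough_context=True)))
```

In practice you would still pick the matching model variant by hand in the model selector; the function only writes the heuristic down so it stops feeling like a slot machine.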

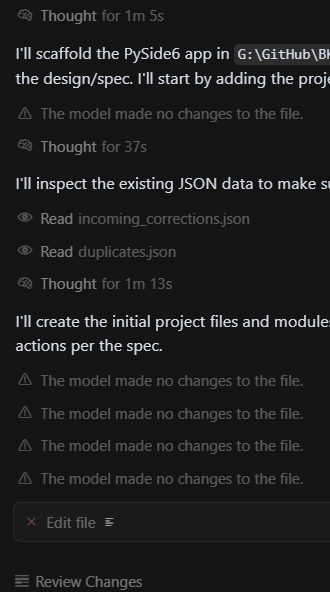

GPT-5 in multi-workspace workspace

Gemini is now working correctly in the same chat with the same start prompt. I sent the feedback via dislike.

Gemini is surprisingly great. Finally uses tools without problems and blossoms to its full potential!

First time trying Opus-4.1-Thinking, on an Android Kotlin problem that Gemini and Grok 4 couldn’t solve quickly.

It didn’t solve it either, but it burned $5 in 5 minutes. I thought about letting it burn at least $10, but it started bypassing the instructions.