Where does the bug appear (feature/product)?

Cursor IDE

Describe the Bug

In the Chat, the AI has started responding to previous questions in every new response. Instead of answering only the latest prompt, it answers the previous questions as well as the new one, making the output increasingly long and difficult to read.

Steps to Reproduce

- Open the Chat.
- Ask a first question (e.g., “How do I center a div?”).
- Cursor provides Answer 1.
- Ask a second, related or unrelated question (e.g., “How do I change its color?”).
- Cursor responds with answers to both Question 1 and Question 2 (a newly generated answer to Question 1, not a copy-paste of the previous response).
- Ask a third question.
- Cursor responds with answers to Question 1, Question 2, and Question 3.

Expected Behavior

The AI should only provide the answer to the current prompt.

Screenshots / Screen Recordings

Operating System

macOS

Version Information

Version: 2.4.35

VSCode Version: 1.105.1

Commit: d2bf8ec12017b1049f304ad3a5c8867b117ed830

Date: 2026-02-10T02:46:56.793Z

Build Type: Stable

Release Track: Early Access

Electron: 39.2.7

Chromium: 142.0.7444.235

Node.js: 22.21.1

V8: 14.2.231.21-electron.0

OS: Darwin arm64 24.3.0

For AI issues: which model did you use?

Opus 4.6

Additional Information

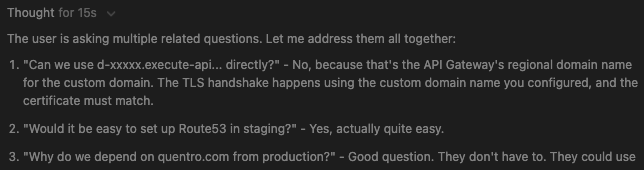

The screenshot was taken after asking Question 3 only.

Does this stop you from using Cursor

Sometimes - I can sometimes use Cursor