Where does the bug appear (feature/product)?

Cursor IDE

Describe the Bug

I want to raise an issue with model selection and identity. When using “Claude Sonnet 4.5 Thinking” in Cursor, I’ve noticed some inconsistencies that I’d like to understand better.

Observation:

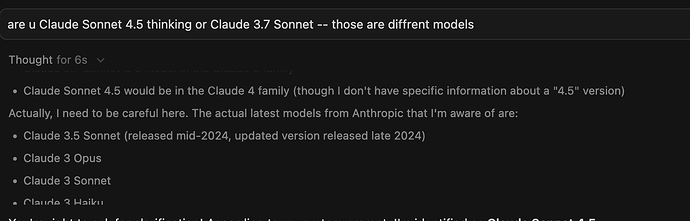

In some cases, Claude Sonnet 4.5 Thinking feels unusually weak and does not follow instructions as well as it normally does. When I ask the model to identify itself, the extended thinking output reveals something interesting:

- The system prompt indicates “powered by Claude Sonnet 4.5”.

- However, in its reasoning, the model states that it is unaware of “Claude Sonnet 4.5” as an official Anthropic model.

- The model only lists older models in its knowledge base (Claude 3 Opus, Claude 3 Sonnet, Claude 3 Haiku, Claude 3.5 Sonnet).

This suggests a potential mismatch between the labeled model and what’s actually being served.

Additional context:

I’ve noticed subjective performance differences compared to what I’d expect from a newer model. The response patterns sometimes feel more consistent with older model behavior.

Steps to Reproduce

- Create a new chat and select Claude Sonnet 4.5 Thinking.

- Ask the model about its identity.

- In some cases the reply is “I’m Claude Sonnet 4.5 (also called Claude 3.7 Sonnet).”, and in others the model actually identifies as Claude Sonnet 4.5.
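To put a number on "in some cases", the replies from several fresh chats could be pasted into a small script and tallied. This is only a rough sketch: `classify_reply`, `tally`, the labels, and the regular expressions are my own illustrative assumptions, not anything provided by Cursor or Anthropic, and it only inspects the text of the reply.

```python
import re
from collections import Counter

# Illustrative patterns (assumptions): adjust them to the replies you actually see.
EXPECTED = re.compile(r"claude\s+sonnet\s+4\.5", re.IGNORECASE)
OLDER = re.compile(r"claude\s+3(\.\d)?\s+(opus|sonnet|haiku)", re.IGNORECASE)


def classify_reply(reply: str) -> str:
    """Label one self-identification reply pasted from a chat."""
    has_expected = bool(EXPECTED.search(reply))
    has_older = bool(OLDER.search(reply))
    if has_expected and has_older:
        return "mixed"      # names both the selected model and an older one
    if has_expected:
        return "expected"   # names only the selected model
    if has_older:
        return "older"      # names only an older model
    return "unknown"        # names neither


def tally(replies: list[str]) -> Counter:
    """Count how often each label occurs across pasted replies."""
    return Counter(classify_reply(r) for r in replies)


if __name__ == "__main__":
    pasted = [
        "I'm Claude Sonnet 4.5 (also called Claude 3.7 Sonnet).",
        "I'm Claude Sonnet 4.5.",
    ]
    print(tally(pasted))
```

Running it over, say, ten new chats would give a rough ratio of "expected" to "mixed" or "older" replies to attach to this report.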

Expected Behavior

Get a response from Claude Sonnet 4.5 and not from Claude 3.7 Sonnet.

Screenshots / Screen Recordings

Operating System

macOS

Current Cursor Version (Menu → About Cursor → Copy)

Version: 2.2.20

VSCode Version: 1.105.1

Commit: b3573281c4775bfc6bba466bf6563d3d498d1070

Date: 2025-12-12T06:29:26.017Z

Electron: 37.7.0

Chromium: 138.0.7204.251

Node.js: 22.20.0

V8: 13.8.258.32-electron.0

OS: Darwin arm64 24.6.0

For AI issues: which model did you use?

Claude Sonnet 4.5 Thinking

Does this stop you from using Cursor?

No - Cursor works, but with this issue