Been using GPT-5 mostly since it was released. I use Sonnet (thinking and non-thinking) and other models for some things, but most of it has been GPT-5 lately. Mostly trying to get a good feel for it, and there are some things it seems to have an advantage at.

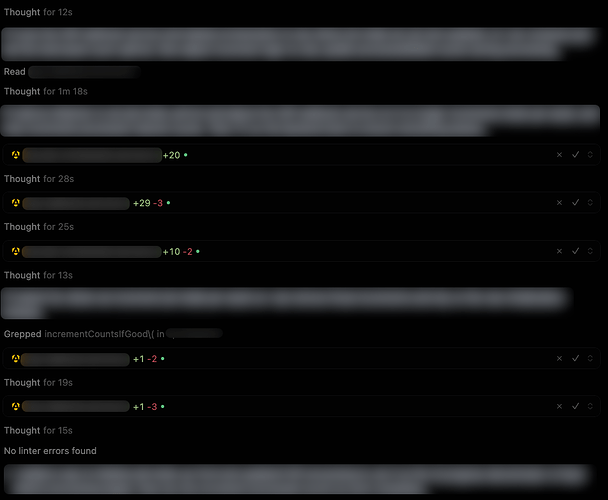

However, thus far, my overarching feeling about GPT-5 is that it is WASTING TIME!! Its thinking cycles seem to average around 30 seconds, and generally range from about 18 seconds to over 60 seconds. A single prompt in the agent chat takes maybe 8-10 minutes to complete (things seem to have become rather slow lately, and I’m not sure it’s limited to GPT-5), and 3-5 minutes of that is lost to GPT-5’s excessively long “thinking” cycles.

This is no joke. This is a LOT of time. I literally just sat through a single 1m4s thinking cycle!! Looking at what it thought about, I honestly cannot fathom WHY it thought so long…it was relatively primitive: “ok, so I’m going to be working on unit tests….which files…ah, these files, same files I’ve been working with all along, ok so what do I do, oh, right…I’m writing unit tests!” Reads some files. Then it thinks again, 42 seconds, same BS. Reads a few more files. Thinks again, 37 seconds. WHAT THE ACTUAL FRICKIN HECK?!?!

This is starting to feel like a LEGIT SCAM. This is pointless, useless, and utterly wasteful of my time and my money, and the sole purpose, it’s starting to seem, is to line the pockets of OpenAI execs? Because this thinking is mundane, trivial, and primitive. It is needless, and seems to offer no fundamental benefit to the BASIC task of writing unit test code (something, it seems, just about any model can do, and do well, without any thinking capabilities at all…heck, generating massive amounts of unit test code was the first thing I started doing with GitHub Copilot years ago, back when it was just tab completions!)

This issue came to a head after I spent a day troubleshooting a bunch of broken code GPT-5 produced yesterday morning and the day before. Code that built, but had plenty of runtime issues. Turned out, it was really crappy code! I even spent about a half hour DEBATING the nature of TypeScript assignment restrictions with the stupid thing. I’ve been programming TypeScript for over a decade! I’ve lived and breathed it nearly every day for 3600 days! Not only was GPT-5 dead wrong, it was demonstrably wrong: I would literally edit the code myself, fixing it the way I was instructing the model to fix it (which it would NOT do, especially after wasting 40-60 seconds “thinking” about it!), and there were no build or runtime errors. So not only is the model wasting my time with its useless thinking cycles, it’s even DEBATING me on what it thinks are the fundamentally necessary reasons why it had to do what it was doing.

In the end, what it was doing, or trying to do, was bypass the very fundamental reason why anyone would choose to use TypeScript: for the type checks! But GPT? Ah, nah, who needs type checks when you can just ( as any).do.whatever.the.f.you.want()!?!?!?
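For anyone who hasn't been bitten by this, here is a minimal sketch of why the `as any` escape hatch is a problem. This is NOT the code GPT-5 actually produced; the names (`User`, `isUser`, `shoutUnsafe`, `shoutSafe`) are all made up for illustration. The point is just that a cast to `any` compiles cleanly and then blows up at runtime, while a type guard keeps the compiler involved.

```typescript
// Hypothetical shape, invented for this example.
interface User {
  name: string;
}

// Hypothetical type guard: narrows an unknown value to User at runtime.
function isUser(value: unknown): value is User {
  return (
    typeof value === "object" &&
    value !== null &&
    typeof (value as { name?: unknown }).name === "string"
  );
}

// The escape hatch: the cast silences the compiler, so a wrong shape
// only shows up as a runtime error deep inside the call.
function shoutUnsafe(input: unknown): string {
  return (input as any).name.toUpperCase();
}

// The type-checked version: the guard proves the shape before use,
// and bad input is rejected with a clear error at the boundary.
function shoutSafe(input: unknown): string {
  if (!isUser(input)) {
    throw new TypeError("expected a User with a string name");
  }
  return input.name.toUpperCase();
}
```

Both functions build fine. The difference is that `shoutUnsafe({ name: 42 })` fails somewhere inside the call with a confusing runtime error, while `shoutSafe` refuses the bad input up front and the compiler still checks everything after the guard.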

There is something very wrong with GPT-5 thinking. More and more it seems to be pointless waste that offers no actual benefit to the process. In fact, after researching thinking models recently, and especially after coming across an article from Anthropic about how prolonged thinking cycles actually degrade the results, increase hallucination, etc. (now it makes sense why their cycles are around 1-5 seconds on average, and rarely top 11 seconds in my case!), I am starting to think that GPT-5’s thinking is actually to my detriment.

I am EAGERLY awaiting non-thinking versions of GPT-5. Especially if they come at, say, a 0.5x cost rate vs GPT-5, and especially if, WITHOUT thinking, they actually write good code!

In the meantime, I think it’s back to Claude. My experiment with GPT-5 has shown it’s not as solid a model as it seems, or was hyped to be, at least not once you get into the weeds of what it is actually doing. It’s doing some pretty bad stuff in those weeds!