Hey.

My question is:

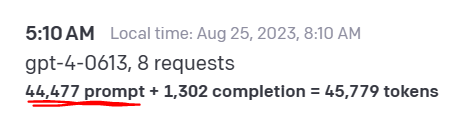

What happens when the input is more than 8k tokens (either the code is too large or the chat history has gotten too long)?

What if the code I'm mentioning with @ plus the chat history adds up to more than that?

Does it send only the last part to OpenAI? A summary of everything? Does it make multiple calls? Does it turn the history into embeddings?

What exactly will happen?
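
To make the first option concrete, here is a rough sketch of what "send only the last part" could look like. This is not Cursor's actual behavior, which I don't know. The names `count_tokens` and `keep_recent` are made up for illustration, and counting tokens by splitting on whitespace is an assumption standing in for a real tokenizer.

```python
def count_tokens(text: str) -> int:
    # Assumption: approximate tokens as whitespace-separated words.
    # A real tokenizer would give different counts.
    return len(text.split())


def keep_recent(messages: list[str], budget: int) -> list[str]:
    """Keep the newest messages whose combined size fits within `budget`."""
    kept = []
    used = 0
    # Walk from newest to oldest and stop at the first message that does not fit.
    for msg in reversed(messages):
        cost = count_tokens(msg)
        if used + cost > budget:
            break
        kept.append(msg)
        used += cost
    # Restore chronological order before returning.
    kept.reverse()
    return kept


history = ["one two three", "four five", "six seven eight nine"]
print(keep_recent(history, budget=6))
```

With a budget of 6, the oldest message is dropped and the two newest are kept. The other options in the question (summarizing, multiple calls, embeddings) would need a different approach.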

I'd say Cursor has a special approach for that and doesn't really have a token limit, because it uses multiple OpenAI models (an embeddings model is one of them):


I think we generally do a good job of keeping almost everything needed in context. We’re going to be pushing some updates that provide much more visibility into what actually goes into the context as well.

@truell20 can probably give more visibility on what happens now for long files or long conversation histories


Awesome. Yeah, I would love to hear that, @truell20.