How are things going with your Cursor?

At the start of the month, I was excited and thought — this is a great tool, we’re going to accomplish a lot. But by the end of the month, I find myself wondering what I even spent my time on. Setting up all those MCP rules and contexts now feels like an irrational effort.

Honestly, I have never found any use for rules, documents, or MCP tools. It all just seems to confuse the models more.

Most times I don’t even let it code anymore. It is sometimes helpful for finding bugs, but a lot of the time it isn’t.

What honestly irritates me to no end is how confident the models are even when blatantly wrong. Celebrations, emojis, and meanwhile it’s just making things worse.

And then, very occasionally, it pulls something great out of nowhere…

But is this worth the 200 a month, when even after one day of use I start to get warnings about when I am predicted to run out of requests? I mean, is this still honestly cheaper than just going straight to the provider and using (or building) some other AI dev tooling?

But the models are so confident, like: ‘here is your battle-tested, perfect, 100% working solution’ which fails immediately after starting.

Today I literally fell back to last night’s result, because it had messed up all the code I was working on until it was unusable. At least, as a free trial, it did not cost money… Continuous circular solution offers (AI pal, we tried that 2 hours ago), ‘now it’s solved, I found the issue’ (no, it did not), then a 5-minute generation with free Copilot, importing, and it works (‘nice code, better than my overcomplicated one’).

And then you add 2 extra functions to polish it a little bit and it totally collapses.

I’m constantly seeing you post about issues on here. Do you need some help?

Same experience: constantly being told “You’re absolutely right”, followed by “it’s working 100%”, only to find it does not work and has more issues than what we began with.

Useless AI IDE.

Yes, thanks. I appreciate your help.

Cursor is an incredible tool for me; it saves me hours of work.

I don’t fully understand what some of the people in this thread are asking for. What does it even mean that the “quality is dropping”?

At the end of the day, Cursor is a wrapper. Which model are you using?

Choose a good model, and it will work just fine.

I’ll probably never fully understand these kinds of threads.

Cursor isn’t a person; it’s simply a smart interface for a powerful code-generation API.

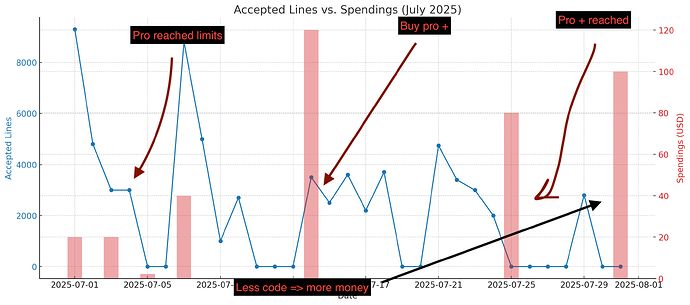

This tool acts as a layer between me and the LLM, and the quality of the output depends on how it interacts with the LLM and my code. Over the past month, the quality has decreased while the costs have increased. Is that really so hard to understand?

To be honest, yes — I don’t mean to upset you, but I’m also a customer, and I pay them several hundred dollars every month.

It really is frustrating to pay that kind of money when the quality isn’t there.

But I’m genuinely curious — I’m using O3 and either Sonnet 4 or Opus.

Which model are you using?

And what kind of project are you working on? What programming language?

I’m just curious to understand better.

You are right, and you are wrong. Try exactly the same model without Cursor and you will get different quality, in some cases much better. This is why I stopped using Cursor myself. It is not about money, but about time I cannot spend working because I am babysitting yet another bug, update, or hallucination that I can see is not happening elsewhere with the same model.

I work exclusively with Sonnet Max.

My project uses TypeScript and GraphQL on the backend, and Next.js on the frontend.

On the backend, it works quite well because I have a strict architecture with full documentation.

But on the frontend, it turns into complete chaos.

Models do seem to have strengths and weaknesses. I find Sonnet 4 good at implementing logic and understanding how many cogs work in unison, but I personally still switch to Gemini 2.5 for more creative, frontend work.

Regarding cost vs value, that can really only be down to each person! Many of our users find great performance on the projects they work on, where the cost is almost a no-brainer, but others working on maybe more obscure languages or frameworks may find the models need a lot more guidance, via rules and docs, before they get positive performance from the models.

I’d highly recommend checking out the Guides section of our docs for more guidance and best practices from the team that may help!

That’s interesting, what you’re saying.

I’ve tried Gemini 2.5 several times, and each time I end up going back, disappointed, to Sonnet 4.

Not because Gemini is bad, but because of how it handles tool calls and continuous work.

It keeps asking me after every little step, “I’m done — should I continue?”

Sonnet 4, on the other hand, especially since you added the TODO feature, builds its own task list and is able to work continuously for 30 minutes without bothering me.

That’s strange. Tell me which model and which programming language you’re using.

What you’re saying isn’t directly related to Cursor itself. It has more to do with the model’s language understanding, codebase size, and other factors.

@danperks explained it perfectly here.

Try making smaller, more incremental changes.

Let me give you a tip that works great for me — maybe it’ll help you too:

Let O3 handle the planning.

Explain to it exactly what you want — it will do the research, analyze, and prepare everything needed.

Then let Sonnet 4 execute the actual implementation.

It works like magic — try it and see for yourself.
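If you want to try the same split outside the IDE, here is a rough sketch of the plan-then-implement idea. Everything in it is an assumption for illustration: `planner` and `coder` are hypothetical functions that take a prompt and return text (you would wrap whatever model APIs you actually use), and the prompts and the numbered-list format are made up, not anything Cursor does internally.

```python
from typing import Callable, List

# Hypothetical type: any function that takes a prompt and returns the model's reply.
ModelCall = Callable[[str], str]


def parse_steps(plan: str) -> List[str]:
    """Keep only lines that look like '1. do something' and return their text."""
    steps = []
    for line in plan.splitlines():
        number, dot, text = line.strip().partition(".")
        if dot and number.isdigit() and text.strip():
            steps.append(text.strip())
    return steps


def plan_then_implement(task: str, planner: ModelCall, coder: ModelCall) -> List[str]:
    """Ask the planning model for small steps, then hand each step to the coding model."""
    plan = planner("Break this task into small numbered steps:\n" + task)
    return [coder("Implement only this step:\n" + step) for step in parse_steps(plan)]


# Stand-in models so the sketch runs without any API key.
def fake_planner(prompt: str) -> str:
    return "Here is the plan:\n1. Add the GraphQL type\n2. Write the resolver"


def fake_coder(prompt: str) -> str:
    return "done: " + prompt.splitlines()[-1]


results = plan_then_implement("Add a users query", fake_planner, fake_coder)
print(results)
```

The point of the split is that the coding model only ever sees one small step at a time, which fits the earlier advice about making smaller, more incremental changes.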

My experience (including my own and my senior developer’s) is that for vibe coders like me, the app is incredibly powerful. I’ve built out fully fledged features in days that would take teams of developers weeks, and I’m not even an engineer. My senior developer is equally impressed, but when he dives into the code, it’s a ■■■■■■ nightmare🤣. He eventually just throws his hands up and says, well, if it works, screw it🤣.

Using only one model limits your ability to identify what works for you. My experience with Claude has been mixed—while it excels in planning, brainstorming, and summarizing emails, it’s not great for coding. It is wildly optimistic, an 455 kisser, forgets instructions, tells me night is day to avoid admitting it does not know, etc.

I have used v3.5, v3.7, and v4 of Sonnet, so this isn’t a one-off for me. I had a max Anthropic key and burnt a ton of API tokens with Opus a while back, running up a big bill. Yet I cannot consistently get the Claude slot machine to pay out. It has memorised some great solutions from its training set. Yet for every great solution there is an unknown set of cases where it will confidently rewrite your tests to accept the bug.

Now, I use an online pro model for planning and get it to craft coding prompts, which I then input into a simpler coding model. This combination is more effective for me than relying solely on Claude. Sometimes, less is more. YMMV.

Hey, I’ve been using Cursor for about 3-4 months and this has been a real problem. What you mentioned is correct: the quality is decreasing while the cost is going up. I did not opt in to the pay-as-you-go option, but they are still charging me extra fees, which nobody else does. It is increasing the cost for sure, and it is not worth it for sure. I agree with the post you made, and thanks for bringing it up, because I’ve been in a discussion with them where they charged me wrongly and added a $40 extra fee. Of course I’ve been on a monthly plan, but charging me extra when I didn’t even know whether I had opted in to the pay-as-you-go option is kind of disgusting. Being honest with you, I’ve been talking to them, but they say I opted in to the option. I have not, precisely because I remember knowing they would charge me. And it’s not the first time: they have been charging me extra every time I try to choose a different mode. So I’m not very happy about how they are stealing money. This is a real problem, and I have proof if I need to show you guys or anybody. In the beginning it was so great, but now they are just being money-centric instead of providing quality. That’s my honest feedback, and I’m available for any clarification needed. Thank you.

Like many other users, I wondered about Cursor’s quality when I got unsatisfactory responses.

I can confirm that this depends A LOT on the model.

For my use cases, Auto mode is useless, but Claude 4 Sonnet (thinking) is almost perfect.

I wish I could use Auto mode for everything (to save money), but it’s not possible.