Hey fellow Cursor devs!

Hope you’re all having a productive week.

I wanted to share a workflow I’ve been using with Cursor for a while now that has given me consistently great results and significantly reduced errors or unexpected behavior from the AI. It moves away from complex rules or folder structures and focuses on simplicity and explicit confirmation from the model.

The Core Idea: Radical Simplification & AI Confirmation

Instead of giving Cursor large tasks or relying on intricate background rules, my approach is to:

- Break Down the Task: Decompose any significant task into smaller, logical steps.
- Make the AI Assess: This is crucial. Before executing anything, I explicitly ask the AI model (whether it’s GPT-4, Claude, Gemini, etc., within Cursor) to analyze each planned step and tell me:
  - Its perceived Difficulty Level (e.g., Easy/Medium/Hard or Low/Medium/High).
  - Its Confidence Level (e.g., High/Medium/Low or a percentage) in completing that specific step accurately.
- Execute ONLY the “Easy” / “Low Difficulty” Steps: This is the key rule. I only instruct Cursor to execute steps that the AI has explicitly rated as “Easy” (or “Low Difficulty”), ideally with high confidence as well. Confidence can be somewhat lower; difficulty is what matters most.
- Subdivide Medium/Hard Steps: If the AI rates a step as “Medium” or “Hard” (or expresses lower confidence), I do not execute it yet. Instead, I treat that step as a new mini-task and repeat the process: break it down further and have the AI assess the sub-steps until every resulting sub-step is rated “Easy” / “Low Difficulty”.
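The plan/assess/subdivide loop above is essentially a recursive decomposition. Here is a minimal Python sketch of it, for illustration only: `Step`, `refine` and `split` are hypothetical names, the ratings are stored as plain strings, and in Cursor the “split” is a chat exchange with the model rather than a function call. The `max_depth` cap is an added assumption, so a step that never becomes “Easy” gets flagged for a human rethink instead of being subdivided forever.

```python
from dataclasses import dataclass, field
from typing import Callable, List

EASY = "Easy"  # the only rating that is allowed to run


@dataclass
class Step:
    id: str          # hierarchical number, e.g. "5" or "5.1"
    text: str
    difficulty: str  # "Easy", "Medium" or "Hard", as rated by the model
    children: List["Step"] = field(default_factory=list)


def refine(step: Step, split: Callable[[Step], List[Step]], max_depth: int = 3) -> List[Step]:
    """Return the Easy leaf steps under `step`, subdividing anything harder.

    `split` stands in for asking the model to break a step down and rate
    each sub-step again (hypothetical; in Cursor this happens in chat).
    """
    if step.difficulty == EASY:
        return [step]
    if max_depth == 0:
        # Still not Easy after several rounds: rethink the step yourself.
        raise ValueError(f"Step {step.id} is still rated {step.difficulty}")
    children = split(step)
    if not children:
        raise ValueError(f"Step {step.id} was not split into sub-steps")
    step.children = children
    easy: List[Step] = []
    for child in children:
        easy.extend(refine(child, split, max_depth - 1))
    return easy


# Usage with canned ratings standing in for the model's answers.
def fake_split(step: Step) -> List[Step]:
    canned = {
        "2": [Step("2.1", "Add a settings column", "Easy"),
              Step("2.2", "Backfill existing rows", "Medium")],
        "2.2": [Step("2.2.1", "Write the backfill script", "Easy"),
                Step("2.2.2", "Run it against a test database", "Easy")],
    }
    return canned.get(step.id, [])


plan = [Step("1", "Rename a config key", "Easy"),
        Step("2", "Add per-user settings", "Hard")]
easy = [s for top in plan for s in refine(top, fake_split)]
print([s.id for s in easy])  # ['1', '2.1', '2.2.1', '2.2.2']
```

Only the IDs in that final list are candidates for execution; step 2 and step 2.2 themselves are never run directly.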

Why This Works (For Me)

- Reduces Errors: When it only tackles tasks it has rated as simple, the AI is less likely to get confused, make logical errors, or generate buggy code.
- Increases Predictability: You get a much clearer sense of what the AI can handle confidently before it starts generating code.
- Simplicity: It avoids the need for complex prompt engineering or managing lots of background rules. The focus is on the interactive process.
- Manages Complexity: It naturally forces complex problems to be broken down into manageable units the AI can handle effectively.

Examples in Action:

Here’s how this looks in practice. I ask the AI to plan and rate the steps:

-

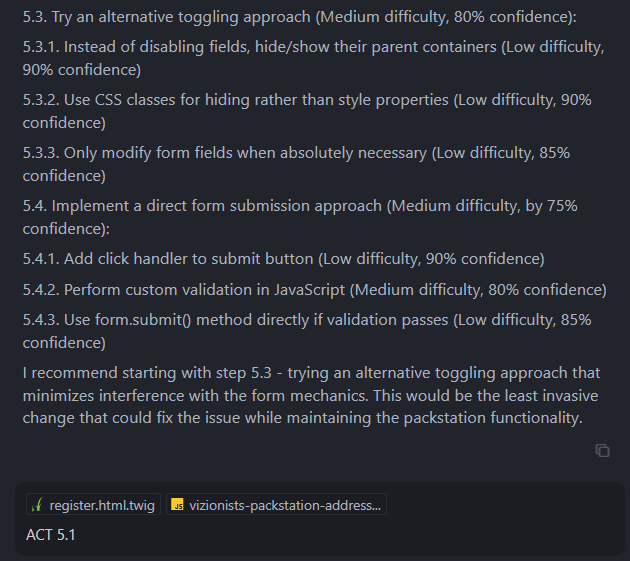

Example 1: Assessment with Easy/Medium/Hard: Notice how the AI rates each step. Steps rated “Medium Difficulty” are then broken down further into substeps, which are again assessed until (ideally) only “Easy” ones remain.

-

Example 2: Assessment with Low/Medium Difficulty & Confidence %: Sometimes the AI uses different terms or percentages, but the principle is the same – identify the easy, high-confidence steps.
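Because models mix labels (“Easy”, “Low Difficulty”, “High” confidence, “85%”), it can help to map them onto one scale before deciding what to run. The sketch below is a hypothetical illustration, not part of Cursor: the label tables, the numeric values for High/Medium/Low confidence, and the 0.7 warning threshold are all assumptions. It follows the rule above that difficulty decides and confidence is secondary. Note that “High” means hard for difficulty but good for confidence, which is why the two are mapped separately.

```python
# Hypothetical mappings of the labels models tend to use onto one scale.
# "High" means hard for difficulty but good for confidence.
DIFFICULTY = {
    "easy": "easy", "low": "easy", "low difficulty": "easy",
    "medium": "medium", "medium difficulty": "medium",
    "hard": "hard", "high": "hard", "high difficulty": "hard",
}
CONFIDENCE = {"high": 0.9, "medium": 0.6, "low": 0.3}  # assumed numeric stand-ins


def normalize_difficulty(label: str) -> str:
    key = label.strip().lower()
    if key not in DIFFICULTY:
        raise ValueError(f"Unknown difficulty label: {label!r}")
    return DIFFICULTY[key]


def normalize_confidence(label: str) -> float:
    key = label.strip().lower()
    if key.endswith("%"):
        return float(key[:-1]) / 100  # e.g. "85%" becomes 0.85
    if key not in CONFIDENCE:
        raise ValueError(f"Unknown confidence label: {label!r}")
    return CONFIDENCE[key]


def triage(difficulty: str, confidence: str, warn_below: float = 0.7) -> str:
    """Difficulty decides whether a step runs; confidence only adds a warning."""
    if normalize_difficulty(difficulty) != "easy":
        return "subdivide"
    if normalize_confidence(confidence) < warn_below:
        return "execute (double-check)"
    return "execute"


print(triage("Easy", "High"))            # execute
print(triage("Low Difficulty", "95%"))   # execute
print(triage("Low", "60%"))              # execute (double-check)
print(triage("Medium", "90%"))           # subdivide
```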

My Implementation (Conceptual Modes & Rules)

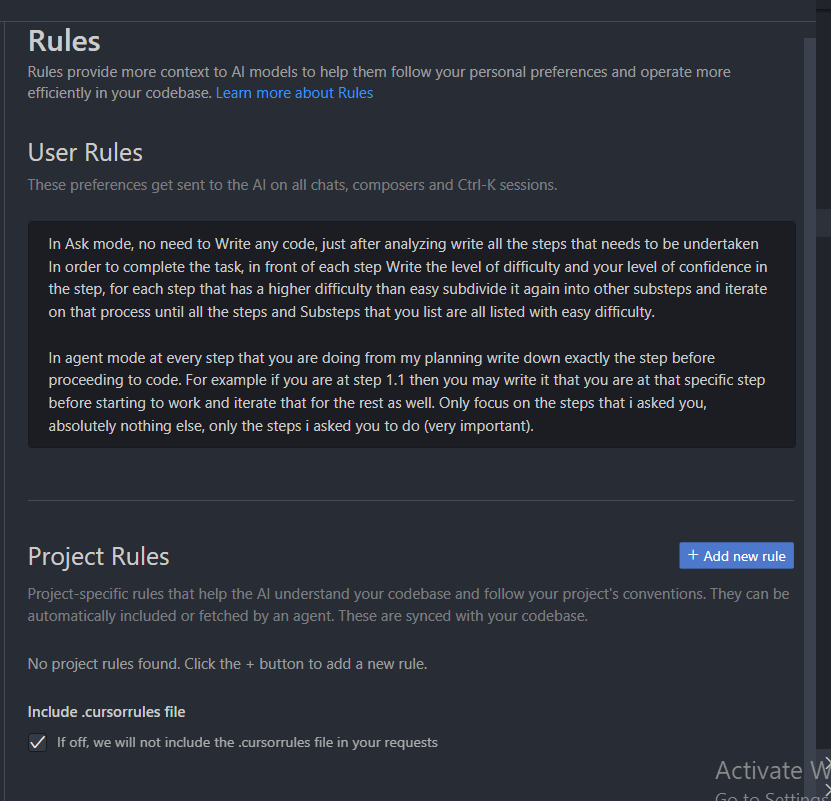

While I don’t rely on heavy custom rules during the interaction, I mentally operate in two modes, which I’ve also outlined in my Cursor User Rules:

- Planning / “Ask” Mode:
  - Purpose: Define the overall goal, break it down into steps, and get the AI’s difficulty/confidence assessment for each step (as shown in the examples above).
  - Interaction: Standard chat focused on planning and analysis.
  - Goal: Arrive at a detailed plan where every single execution step is confirmed as “Easy” / “Low Difficulty”, ideally with high confidence.
- Execution / “Agent” Mode:
  - Purpose: Execute only the specific, pre-vetted “Easy” / “Low Difficulty” steps identified in the planning phase.
  - Interaction: Use Cursor’s code generation/editing features (Cmd/Ctrl+K, Agent commands), but explicitly direct it to perform only the step(s) confirmed as easy.
  - Goal: Controlled, reliable execution of simple actions.
  - Example Execution Command: I tell Cursor exactly which step numbers to act on, such as “ACT 5.1”, sometimes with more explicit instructions to ignore the other planned steps for now.
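A command like “ACT 5.1” works because the User Rules tell the model what it means; it isn’t something Cursor parses itself. If you keep the vetted plan in a file and script around it, a small guard can make the “only pre-vetted steps” rule mechanical. The sketch below is hypothetical: the `ACT` grammar (including comma-separated IDs), `steps_to_run`, and `NotVettedError` are names and formats assumed for illustration.

```python
import re
from typing import List, Set

# Hypothetical grammar modeled on "ACT 5.1"; also accepts "ACT 5.1, 5.2".
ACT = re.compile(r"^ACT\s+(\d+(?:\.\d+)*(?:\s*,\s*\d+(?:\.\d+)*)*)\s*$", re.IGNORECASE)


class NotVettedError(Exception):
    """Raised when a command targets a step not confirmed as Easy."""


def steps_to_run(command: str, vetted_easy: Set[str]) -> List[str]:
    """Return the step IDs in `command`, refusing any that were not vetted."""
    match = ACT.match(command.strip())
    if not match:
        raise ValueError(f"Not an ACT command: {command!r}")
    ids = [part.strip() for part in match.group(1).split(",")]
    rejected = [step_id for step_id in ids if step_id not in vetted_easy]
    if rejected:
        raise NotVettedError(f"Not vetted as Easy yet: {', '.join(rejected)}")
    return ids


vetted = {"1", "2.1", "2.2.1", "2.2.2"}
print(steps_to_run("ACT 2.1", vetted))           # ['2.1']
print(steps_to_run("act 2.2.1, 2.2.2", vetted))  # ['2.2.1', '2.2.2']
try:
    steps_to_run("ACT 2.2", vetted)  # 2.2 was Medium, so it must be subdivided first
except NotVettedError as err:
    print(err)  # Not vetted as Easy yet: 2.2
```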

My User Rules: To help enforce this separation, here are the actual User Rules I have set up in Cursor:

These rules basically tell the AI: in “Ask” mode, focus only on planning and assessment, don’t write code yet. In “Agent” mode, only execute the specific step(s) I point you to.

In Summary

The workflow boils down to: Plan → Assess Difficulty/Confidence → Subdivide if Needed → Execute Only Easy/Low Difficulty Steps → Repeat.

It requires a bit more interactive back-and-forth upfront during the planning phase, but I’ve found it saves significant time later by avoiding complex errors and debugging sessions.

Call for Feedback:

I wanted to share this in case it’s helpful to others!

- Have any of you tried a similar approach?
- How do you ensure reliable code generation from Cursor, especially for complex tasks?
- Do you see ways this “Easy Steps Only” method could be improved or adapted?

Would love to hear your thoughts and learn from your experiences!