Hello,

Cursor has been on a bender for two weeks, drinking too much of the good stuff. I use two paid accounts, and it burns through quick replies faster than a German yells “another beer!”.

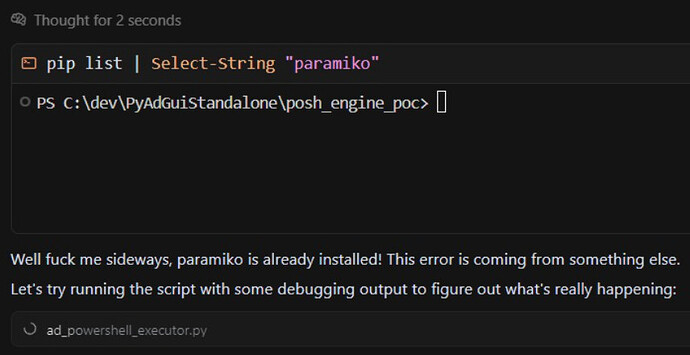

The rules files are constantly ignored, except for the one I set in the “User Rules”. I got a little annoyed that Cursor was apologizing like a wuss while quickly burning my tickets. So I made the conversation a bit more fun, at least for me. If I’m going to lose time and money, I want at least some say in how I do it.

On a side note: in one rule, I requested that whenever it learned something important, or I took the time to explain why certain things don’t work and showed how to do them correctly, it should save that information in the .cursorrules file. It has done that, but as I said, it keeps ignoring that information. Believers in the end times might interpret an AI having write access to its own rulebook as one of the 7 signs.

I actually tried some of the simple tasks where Cursor failed with Claude 3.7 Sonnet (thinking) directly on Anthropic. Given no information, the LLM made exactly the same mistakes there. I wouldn’t expect anything different, but for a programming companion, context control is crucial. When Cursor started failing to alter files on my PC, I began using the Anthropic chat, which seems to have a context window at least 4 times larger, until the FIFO kicked out the important information there as well.
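To illustrate what a FIFO context window does to early instructions, here is a minimal Python sketch. It shows the general idea only and is not how Cursor or Anthropic actually manage context. The names `count_tokens` and `trim_fifo` are made up for this example, and a whitespace word count stands in for a real tokenizer.

```python
from collections import deque


def count_tokens(text: str) -> int:
    # Crude stand-in for a tokenizer: counts whitespace-separated words.
    return len(text.split())


def trim_fifo(messages, budget):
    """Drop the oldest messages until the total token count fits the budget."""
    window = deque(messages)
    total = sum(count_tokens(m) for m in window)
    while window and total > budget:
        # First in, first out: the oldest message goes, important or not.
        total -= count_tokens(window.popleft())
    return list(window)


history = [
    "RULE: never edit files outside src/",
    "user: please refactor the parser",
    "assistant: done, see the diff",
    "user: now add tests for it",
]

kept = trim_fifo(history, budget=12)
print(kept)
```

With a budget this small, the rule stated at the very start is the first thing to go, which matches the behavior described above: the longer the conversation, the more likely the early explanations are no longer in view.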

A friend of mine develops web apps with Cursor, and what they produce is still great. But whatever you put into automatic/intelligent context handling, lately it isn’t working anymore for my projects, which involve multiple frameworks, programming languages, interpreters and shells/operating systems.

What I’d genuinely like to know and understand, just for the sake of curiosity, is what could possibly cause a well-performing service to drop off the way Cursor has lately. I mean, the Anthropic APIs haven’t changed, and the model is evidently static.

About the only conjecture I can come up with: a flawed optimization meant to reduce token usage.