@jpedrorw what do you mean specifically? We show the pricing as per API cost and the model tokens as provided by Anthropic.

Just saw Sonnet 4.5 think for 4.5 minutes on the same prompt that took GPT-5-high 4 minutes and 2 seconds. Very interesting; I've never seen Sonnet thinking models "think" like this…

you typed it wrong. sonnet

where are they?

I just want to understand how prices are applied within Cursor.

How would this usage cost $321? I mean…


- Anthropic model pricing is here: Pricing | Claude

- They also have a table version which breaks down pricing by model and cache interaction.

I’d assume Cursor uses the 5-minute cache TTL, but I don’t know that for sure. Can you post your full cost breakdown? I looked through mine, and it seems very reasonable:

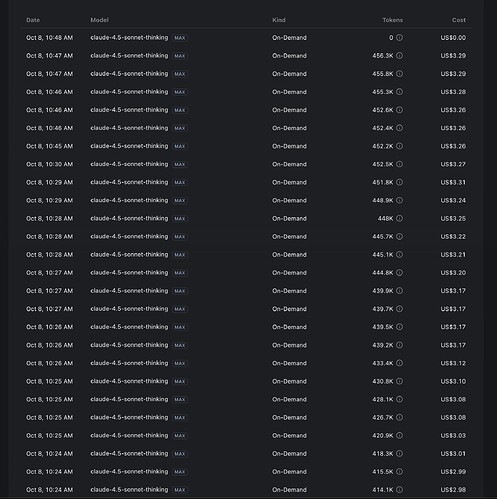

For reference, here it is with the claude-4.5-sonnet-thinking token breakdown:

wtf

These prices seem off compared to Claude's official API prices.

Cursor's mysterious usage within "MAX" and doubled context prices (who knows?)

serious dev big projects lol

In Claude Code this model performs excellently. In Cursor it does well too, but I'm sorry, not at this price point.

I did some investigating, and the prices line up well to me.

Regular pricing (less than 200k context):

- Cache read: $0.30 per million tokens

- Cache write (assuming 5 minute TTL): $3.75 per million tokens

- Raw input: $3.00 per million tokens

- Raw output: $15.00 per million tokens

Then we have the “extended pricing”. My understanding is that when a message is sent with 200k or more total tokens, ALL tokens in that prompt are priced at the extended rate (2x the regular rate for input, and 1.5x the rate for output). In other words, the pricing doesn’t use a bracketing system. I could be wrong about this. Please correct me if my understanding of the pricing is wrong.

That means the extended pricing is like this:

- Cache read: $0.60/Mtok

- Cache write: $7.50/Mtok

- Raw input: $6.00/Mtok

- Raw output: $22.50/Mtok
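As a minimal sketch of the non-bracketed rule described above: the rate table for a request is picked once, based on that request's total prompt size, and then applied to every token in it. The `request_cost` helper, the dictionary names, and the exact boundary (200k or more prompt-side tokens, counting cache reads, cache writes, and raw input together) are assumptions for illustration, not Cursor's or Anthropic's actual billing logic.

```python
# Dollars per million tokens, copied from the two price lists above.
REGULAR = {"cache_read": 0.30, "cache_write": 3.75, "input": 3.00, "output": 15.00}
EXTENDED = {"cache_read": 0.60, "cache_write": 7.50, "input": 6.00, "output": 22.50}

# Assumption: the threshold applies to the prompt-side tokens of one request.
EXTENDED_THRESHOLD = 200_000


def request_cost(cache_read, cache_write, raw_input, raw_output):
    """Estimate the dollar cost of a single request (hypothetical helper)."""
    prompt_tokens = cache_read + cache_write + raw_input
    # No bracketing: one rate table covers ALL tokens in the request.
    rates = EXTENDED if prompt_tokens >= EXTENDED_THRESHOLD else REGULAR
    used = {
        "cache_read": cache_read,
        "cache_write": cache_write,
        "input": raw_input,
        "output": raw_output,
    }
    return sum(rates[kind] * used[kind] / 1_000_000 for kind in rates)


small = request_cost(150_000, 0, 10_000, 2_000)  # 160k prompt: regular rates
large = request_cost(250_000, 0, 10_000, 2_000)  # 260k prompt: extended rates
print(f"${small:.3f} vs ${large:.3f}")
```

Under these assumptions, growing the cached context from 150k to 250k tokens more than doubles the cost of the request, because the raw input and output tokens are repriced too.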

I used this to estimate the cost for my 4.5-sonnet-thinking usage:

```python
def cost(price_dollars_per_mil, used_token_count):
    """Return the dollar cost of a token count at a per-million-token price."""
    return price_dollars_per_mil * (used_token_count / 1_000_000)

# This is my cost, assuming I didn't use the extended
# context window.
my_cost = (
    cost(0.3, 9_984_192)     # Cache read
    + cost(3.75, 2_205_087)  # Cache write
    + cost(3, 426_607)       # Raw input
    + cost(15, 147_826)      # Raw output
)
print(f"${my_cost:.2f}")  # $14.76
```

This lines up - my actual usage was $15.12, but I know for sure I sent a few requests in MAX mode using over 200k context (i.e. higher costs).

I did the same calculation for you:

```python
# This would be your cost, assuming you didn't use the extended
# context window at all.
your_cost_without_extended = (
    cost(0.3, 236_128_856)    # Cache read
    + cost(3.75, 16_819_680)  # Cache write
    + cost(3, 5_743_956)      # Raw input
    + cost(15, 1_043_174)     # Raw output
)
print(f"${your_cost_without_extended:.2f}")  # $166.79
```

~$167 would be way too low for the usage you showed, so I tried a second calculation assuming you used MAX mode the entire time with over 200k context:

```python
# This assumes you used the extended (>200k context) window for every request.
# Notice the price increase for each calculation.
your_cost_with_extended = (
    cost(0.6, 236_128_856)   # Cache read
    + cost(7.5, 16_819_680)  # Cache write
    + cost(6, 5_743_956)     # Raw input
    + cost(22.5, 1_043_174)  # Raw output
)
print(f"${your_cost_with_extended:.2f}")  # $325.76
```

This is very close to your actual $321.48 usage. I assume my estimate is slightly higher because your real usage includes some requests under 200k tokens.
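To put a rough number on "some requests under 200k tokens", the actual bill can be placed between the two estimates. This is only a back-of-the-envelope sketch: it assumes the mix of cache reads, cache writes, input, and output is the same in regular and extended requests, which the usage summary cannot confirm.

```python
# The two estimates from the calculations above, plus the billed amount.
without_extended = 166.79  # every request under 200k context
with_extended = 325.76     # every request at the extended rate
actual = 321.48

# Linear interpolation: the share of usage (by cost weight) that would have
# to be billed at the extended rate to land on the actual amount.
extended_share = (actual - without_extended) / (with_extended - without_extended)
print(f"{extended_share:.0%}")  # 97%
```

Under that assumption, roughly 97% of the usage would have been billed at the extended rate.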

@jpedrorw If I had to guess, you probably stay in MAX mode and reuse the same chats for many, many messages. How often do you start new chats or compress the existing context?