Nothing too crazy here, just curious what kind of logic or science is being applied behind the scenes for an IDE to always rely on one of the WORST LLM models for actually applying or editing code. Why even have AUTO as an option when the only fallback is CHATGPT? Has anyone ever gotten anything else? Proof? Would love to hear from some real folks and not AI/bots.

I notice GitHub Copilot also only gives out unlimited prompts for GPT-4.1, likely because it's so cheap. Well, the quality's not so good…

Eh… If ChatGPT's quality were actually good, it wouldn't be an issue, but when it fails 9 out of 10 times or doesn't use the references, the point seems moot.

Because it's cheap, and Cursor is struggling to keep up with demand at the moment.

Can't even debate this lol. Hit the nail on the head… Is there any way to get quality code out of this model? Trying to get into this code & chill vibe movement, but I'm definitely neither of those things right now haha.

Just get better at prompting. I work on very large codebases and don't have any real issues with Auto.

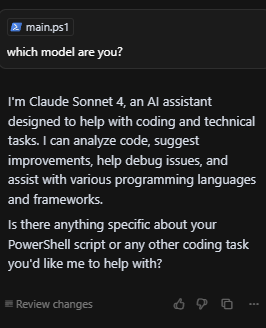

I find this hard to believe. Ask Cursor which model it is and prove to me it's NOT using ChatGPT.

Frankly, I don't think Auto mode should be about being cheap. It should be what the user wants it to be. Since I can select which models are available to me in settings, I would have expected Auto to choose only among my active models (in my case Sonnet and Gemini 2.5). Right now it seems that Auto is its own mode that defaults to the dumbest model most of the time. I had to stop using it because it was breaking everything.

By the way, Auto should get better at determining when MAX context is needed, or MAX context should be selectable by the user even in Auto mode.

Auto is a mix of many of the models available in Cursor and will often route based on model capacity. Models like Claude 3.7 Sonnet, Gemini 2.5 Pro, and GPT-4.1 are all possible models you may get.

I do like the idea of a more customised Auto, so feel free to post it as a Feature Request!

Why didn't Cursor use gpt-4.1-mini for the sake of cheapness? Is it too bad?

I get different answers throughout the day; how would you like your proof delivered?

One shouldn't have to bend their chats to work with Auto / ChatGPT. Sonnet 4 is hands down better, so asking someone to get better at prompting to work with a brain-dead model isn't reasonable when there are better alternatives.

Sonnet might be better at guessing your intentions, but it is also pretty slow. If you learn to prompt better, you could use Ollama instead and get the work done much faster. And cheaper.

Not to belabor this, but your statement assumes that people don't know how to prompt. Give folks some credit; the OP may know a thing or two, and you know nothing about them.

The issue is not about the model 'guessing' intent; it's about the model making use of existing context. If one has to repeat context, that is not a good use of a developer's time.

We could program in assembly language and discuss every block of code individually, and that could indeed be done with any model, but efficiency is about getting the most work done with the least communication. You are not wrong, but you may be missing the point.

So it's a spin-the-wheel game?

danperks also mentioned in a different post that it doesn't decide based on the prompt, only on model availability, meaning it chooses the least-used model.
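To make that "availability only" behaviour concrete, here's a minimal Python sketch of what routing purely by load could look like. It's a hypothetical illustration, not Cursor's actual code: the `Model` class, its `active_requests` and `capacity` fields, and `pick_model` are all made up for this example, and "least used" is interpreted as lowest load relative to capacity. The point is that the prompt never enters the decision.

```python
from dataclasses import dataclass


@dataclass
class Model:
    name: str
    active_requests: int  # hypothetical: requests currently in flight
    capacity: int         # hypothetical: max concurrent requests


def pick_model(models: list[Model]) -> str:
    """Return the least-loaded model with spare capacity.

    Note that no prompt is passed in: the choice depends only on load.
    """
    available = [m for m in models if m.active_requests < m.capacity]
    if not available:
        raise RuntimeError("no model has spare capacity")
    # Lowest utilisation ratio wins, i.e. the "least used" model.
    best = min(available, key=lambda m: m.active_requests / m.capacity)
    return best.name


if __name__ == "__main__":
    pool = [
        Model("claude-3.7-sonnet", active_requests=90, capacity=100),
        Model("gemini-2.5-pro", active_requests=50, capacity=100),
        Model("gpt-4.1", active_requests=10, capacity=100),
    ]
    print(pick_model(pool))
```

With the made-up load numbers above, the busy models get skipped and `gpt-4.1` is picked every time, which would match the "it's always the same model" experience in this thread if the real router worked anything like this.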

kimi-k2

Seems like Cursor should be offering this kimi-k2 model if expense is the concern, especially in Auto mode.

You should evaluate different AI models for different languages, such as what works best for JavaScript, PHP, or Rust, instead of choosing arbitrarily.

@Xernive there is no ChatGPT in Cursor. ChatGPT is an OpenAI chat website and app, not connected to Cursor.