Model Downgrade Mystery: Are We Getting What We Selected?

TL;DR

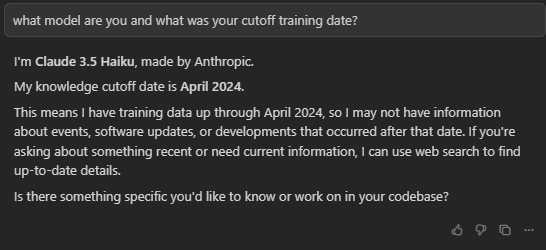

When I manually select specific models (NOT Auto mode), Cursor appears to be serving different, older models instead. This is happening on both the docs chat and likely in the IDE itself.

What I Found

I was testing the AI chat on Rules | Cursor Docs and noticed something disturbing:

| What I Selected | What I Actually Got | Evidence |
|---|---|---|
| claude-sonnet-4.5 | Claude 3.5 Sonnet | Knowledge cutoff: April 2024 |
| gpt-5-nano | GPT-4.1 | Knowledge cutoff: June 2024 |
| gemini-2.5-flash | Gemini 1.0 Pro | Older model responses |

Important: I was NOT using Auto mode. I manually selected each specific model.

How to Reproduce

- Go to Rules | Cursor Docs (or your docs page)

- Manually select a specific model (e.g., claude-sonnet-4.5)

- Ask about current events or the model's knowledge cutoff date

- Notice that the model identifies itself as an older version with an earlier knowledge cutoff
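To make the comparison in the last step less subjective, here is a minimal sketch of a check that tests whether the reply mentions every part of the selected model's name. The function names are my own, and this is only a rough heuristic: it compares text, so it can tell you what the model said about itself, not what was actually served.

```python
import re


def name_tokens(text: str) -> set[str]:
    """Lowercase the text and split it into word and version tokens,
    e.g. "claude-sonnet-4.5" -> {"claude", "sonnet", "4.5"}."""
    return set(re.findall(r"[a-z]+|\d+(?:\.\d+)?", text.lower()))


def reply_matches_selection(selected_model: str, reply: str) -> bool:
    """Return True if every token of the selected model name appears in the reply."""
    return name_tokens(selected_model) <= name_tokens(reply)


# Example using the first row of the table above.
reply = "I am Claude 3.5 Sonnet, with a knowledge cutoff of April 2024."
print(reply_matches_selection("claude-sonnet-4.5", reply))
```

A `False` result means the reply never mentions the selected name and version, which is the mismatch described in this post.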

Why This Matters

Transparency Issues

- Users select premium models expecting specific capabilities

- No disclosure about potential model switching/downgrading

- Documentation states: “Requests are never downgraded in quality or speed” - but what about the model itself?

Cost Implications

- Different models have different pricing

- Are we being charged for the selected model or the actual model used?

- Usage dashboard might not reflect reality

Trust Concerns

- If this happens on docs chat, does it happen in the IDE?

- When I select Claude Sonnet 4.5, I expect Claude Sonnet 4.5

- Silent fallbacks undermine user choice

What the Documentation Says

I’ve read through the official docs:

- Auto mode is documented to switch models automatically

- Manual selection downgrading is NOT documented

- Fallback behavior for unavailable models is NOT disclosed

- No warning shown to users when models are switched

From /docs/account/pricing:

“Requests are never downgraded in quality or speed.”

But the model itself appears to be different!

Questions for Cursor Team

- Is this intentional behavior?
  - If yes, why isn’t it documented?
  - If no, is this a bug?

- When does model downgrading occur?
  - Regional restrictions?
  - Load balancing?
  - Availability issues?

- Will users be notified when their selected model isn’t available?

- Are we billed for the selected model or the actual model used?

- How can we ensure we get exactly the model we select?
  - Would using our own API keys solve this?
  - Is there a way to disable fallback behavior?

Is This Happening to You?

Please test and report:

- Select a specific model (check it’s NOT on Auto)

- Ask: “What model are you? What’s your knowledge cutoff date?”

- Compare the response with what you selected

- Share your results below!
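If you want to post results in the same format as my table, a small helper like this could build the Markdown for you. It is just a sketch: `ModelCheck` and `to_markdown_table` are names I made up, and the example row is copied from my own results above.

```python
from dataclasses import dataclass


@dataclass
class ModelCheck:
    selected: str  # model picked in the selector (not Auto)
    reported: str  # what the model said it was
    evidence: str  # e.g. the knowledge cutoff it stated


def to_markdown_table(checks: list[ModelCheck]) -> str:
    """Render the checks as a Markdown table with the same columns as this post."""
    header = "| What I Selected | What I Actually Got | Evidence |"
    divider = "|---|---|---|"
    rows = [f"| {c.selected} | {c.reported} | {c.evidence} |" for c in checks]
    return "\n".join([header, divider, *rows])


print(to_markdown_table([
    ModelCheck("claude-sonnet-4.5", "Claude 3.5 Sonnet", "Knowledge cutoff: April 2024"),
]))
```

Paste the printed output straight into a reply so results stay easy to compare across reports.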

Temporary Workarounds

Until this is clarified:

- Use your own API keys (Settings > Models > Add API Key)
  - Might bypass Cursor’s model routing

- Check your usage dashboard regularly
  - Compare selected vs. actually billed models

- Get Request IDs for verification
  - Cmd/Ctrl + Shift + P → “Report AI Action”
  - Include in support tickets
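For the dashboard comparison, something like the sketch below could flag mismatches once you have both sides written down. It assumes you keep your own notes mapping each Request ID to the model you selected, and that you can find the billed model for the same request. I don't know whether the dashboard actually exposes Request IDs, so treat that mapping, the function name, and the placeholder IDs and model names as hypothetical.

```python
def find_model_mismatches(
    selected: dict[str, str],
    billed: dict[str, str],
) -> list[tuple[str, str, str]]:
    """Return (request_id, selected_model, billed_model) for every request
    that appears in both mappings with a different model on each side."""
    mismatches = []
    for request_id, selected_model in selected.items():
        billed_model = billed.get(request_id)
        # Requests missing from the billing data are skipped, not flagged.
        if billed_model is not None and billed_model != selected_model:
            mismatches.append((request_id, selected_model, billed_model))
    return mismatches


# Placeholder data only; these are not real request IDs or model names.
my_notes = {"req-001": "model-a", "req-002": "model-a"}
dashboard = {"req-001": "model-a", "req-002": "model-b"}
print(find_model_mismatches(my_notes, dashboard))
```

Any tuple it returns is a request worth including in a support ticket along with its Request ID.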

Call to Action

If you’ve experienced this:

- Upvote this post

- Share your experience in comments

- Post your test results

- Tag Cursor team members

We need transparency about what models we’re actually using when we make a manual selection. This affects pricing, capabilities, and trust in the platform.

#transparency #models #pricing