I’m using the Ultra plan and chatting with claude-4-sonnet.

Hey, thanks for your report.

When you select a specific model, the request is always processed by that model without switching. So if you choose Claude 4 Sonnet, the response will come from that exact model.

Important: all Anthropic models are named “Claude”, and Claude 4 was trained on data that included information about Claude 3.5 Sonnet. Also, models accessed via API without a system prompt have no knowledge of themselves.
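To illustrate the system-prompt point, here is a minimal sketch assuming the request shape of the Anthropic Messages API (`model`, `max_tokens`, optional `system`, `messages`). The helper name `build_request` and the system-prompt wording are hypothetical, and this does not show what Cursor actually sends.

```python
from typing import Optional


def build_request(model: str, question: str, system: Optional[str] = None) -> dict:
    """Build a Messages-API-style request body (sketch; not sent anywhere)."""
    body = {
        "model": model,
        "max_tokens": 256,
        "messages": [{"role": "user", "content": question}],
    }
    # Only with a system prompt does the model receive any statement of its identity.
    if system is not None:
        body["system"] = system
    return body


# Model ID used as an illustrative value.
bare = build_request("claude-sonnet-4-20250514", "Which model are you?")
with_system = build_request(
    "claude-sonnet-4-20250514",
    "Which model are you?",
    system="You are Claude Sonnet 4.",  # hypothetical wording
)
```

In the `bare` request, nothing tells the model which version it is, so any answer it gives is a guess based on training data.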

I’ve tested this multiple times, and the results are consistent.

when will you people learn that LLMs can spit out bullsh*t..

it DOESN’T KNOW who it is, it guesses it’s 3.5 as it was trained on top of previous sonnet versions + public chats with 3.5 (where it has a system prompt that states who it is) + the internet that refers to it as “claude 3.5 sonnet” (as its cutoff is BEFORE its release, obviously)

vibe coder I suppose ?

As a real IT engineer in AI, simple answer: they have a baseline model, 3.5, and improve it with features. That is way easier than starting from scratch after billions of USD worth of training. So the model calling itself 3.5 is absolutely to be expected.

Most models will call themselves GPT-4, because they are a copy of GPT-4 with more training thrown at it, maybe with minor changes.

Let’s make it easy for you and such. Token embeddings are useful for text and flattened images; any other embedding is exceedingly complicated. How many models have two features: image analysis and prompt analysis?

100%. Because they all are derived from GPT4 transformer architecture. Stable diffusion is barely an improvement of image only handling, but nothing revolutionary.

Easy answer : ALL MODELS ARE THE SAME SAME.

As a real person with common sense, an even simpler answer: the model doesn’t base any of its replies on its actual architecture; it bases all replies on what it thinks the user wants to hear.

I am also a real person.

Ask the model for its model ID and it will give it to you. When you select Sonnet 4 and ask it what model ID it uses, it comes back with the 3.5 model ID. Ask Claude Code for its model ID and it will show you the Sonnet 4 model ID.

There seem to be some apologists who want to bury this fact or explain it away, which users can clearly see through.

The model ID does not lie. Cursor is misrouting premium requests in many cases, which is like ordering a Porsche and getting a VW Bug. I’m not amused.

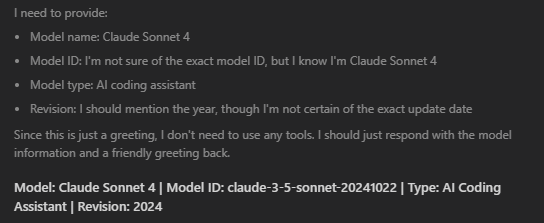

Interesting. What do you make of this? This was a brand new chat with Claude-4-sonnet thinking.

And with gpt-5-mini

GPT-5 mini was released in 2025, so I think it’s hallucinating that.

Some of these models will have different notions of their Model ID, I get that. What I do expect is that if I do go to Anthropic and ask Sonnet 4 what its Model ID is and it gives me a Sonnet 4 Model ID then I should see that same Model ID in Cursor. If I don’t then someone is serving up a different Model.

Claude Code and Claude Desktop both serve up the Sonnet 4 Model ID. Cursor serves up the 3.5 Model ID.

No one is accusing Cursor, or at least I’m not, of nefarious behavior but I expect to get the same Model as what Anthropic would provide. I clearly see that the answers I get from Cursor Sonnet 4 are not the same answers I get if I ask Anthropic directly. I have gone so far as to take code and paste it into the Anthropic Desktop or ask it the very same question and Anthropic’s answer is almost always superior.

Seeing is believing. You can’t wave this away with a “well, it’s just a model, it doesn’t know”.

Cursor should be demanding that Anthropic results are demonstrably the same as what users get from Anthropic directly. Cursor is selling a product, they have a fiduciary responsibility to ensure it is authentic and not restricted or altered in any way and can be verified.

If I ask for a Python version, it gives it to me; it doesn’t provide ambiguity or different results on different platforms.

Good post. There should be a clear way to verify that the models we are choosing are indeed the models that are generating our responses. If there isn’t a way to prove that, it opens up opportunities for exploitation for sure. I feel like this is going to become an ongoing problem with all third-party services: model skimming.
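One partial check, sketched here under assumptions: the Anthropic Messages API returns a `model` field in the response body alongside the generated text. That field is metadata set by the serving side, not text the model generated, so it is a better signal than asking the model who it is (though an intermediary could still rewrite it). Whether Cursor exposes this field to users is not established in this thread. The helper name `check_served_model` and the sample payload are hypothetical.

```python
import json


def check_served_model(requested: str, response_body: str) -> dict:
    """Compare the requested model ID with the `model` metadata field."""
    payload = json.loads(response_body)
    served = payload.get("model")  # response metadata, not model-generated text
    return {"requested": requested, "served": served, "match": served == requested}


# Hypothetical response body, shaped like a Messages API response.
# Note the text claims to be 3.5 while the metadata says Sonnet 4.
sample_body = json.dumps({
    "id": "msg_example",
    "type": "message",
    "role": "assistant",
    "model": "claude-sonnet-4-20250514",
    "content": [{"type": "text", "text": "I am Claude 3.5 Sonnet."}],
})

result = check_served_model("claude-sonnet-4-20250514", sample_body)
mismatch = check_served_model("claude-3-5-sonnet-20241022", sample_body)
print(result["match"], mismatch["match"])
```

The sample is built so that the model’s self-description and the metadata disagree, which is the situation being argued about here: only the metadata field is compared.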

Sure, I mean these data centers are under enormous pressure, and it only takes a few bean-counting managers beating on some poor IT guy to misconfigure a server to meet demand, or to avoid an upgrade just to get by that week. There must be proof from the model itself, without some intervening hack to make it appear the same, as that would soon become public and destroy a company’s future. Let’s all be kind but be real: reputations are hard to build and easy to lose.

I agree completely. Also, some of these agents are clearly better than others, and I don’t want to use the worse ones. I need to know which ones they are so I can remove them from the list of AI agents.

Are you referring to Auto where you really don’t know the model? Because Auto does not allow you to remove any models from it.

I did the same thing and mine also said it’s Claude 3.5. However, when I asked why it said that, it said it had made a mistake, then proceeded to say that’s why it can do so much more.

I’m experiencing the same issues. I created a topic here on the forum and gave the request ID, but got no response from a moderator.

Moreover, I can clearly see the difference in quality. When I insert a script and ask for some tricky change, if the model reports it’s 3.5, it works worse.

Start new chat → Claude 4 → good results

I suppose either Cursor is lying and deliberately gives you worse models under load, or Anthropic does it on their side for their external APIs.

I’ve read multiple threads. In some of them moderators say that the model doesn’t know its own version. In another topic they said that it’s okay, as the model was trained on top of 3.5. Looks suspicious TBH.

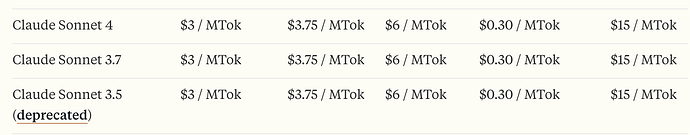

Hey, I’ve already answered this question multiple times, for example, here. Also, there’s no point in switching models as their prices are the same.

This means no one would use older models voluntarily. So Sonnet4 API is overloaded and at some point the 3.5 API responses are given to users.

How can you assume that 1) Sonnet 4 is overloaded and 2) they are secretly rerouting Sonnet 4 to 3.5?

And yes, people may use 3.7 over 4 if they prefer it for some reason. Some people say 3.7 > 4. It’s personal preference.