I was under the impression that Composer uses the .cursorrules file. It’s becoming more apparent lately that it does not.

Same here. Hilarious

Hi @ianjh

It’s strange, but everything’s working fine for me. Could you check? Here are my rules for AI: Start each response with “Yes, sir.”

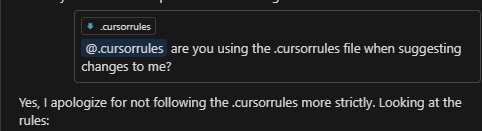

Here’s a screenshot of the response:

Could you also let me know which version of Cursor you’re using?

Hi, I’ve just upgraded, so it was whatever version came before 0.42.4.

I’ll try adding the Yes Sir into the cursorrules file and see how we get on.

I’ve had a nightmare with Composer lately, but I’ll save that for another thread.

Thanks for your response.

Hi, I added this statement to the .cursorrules file:

Start each of your responses with: Yes, sir.

I’ve tested it by a) adding the .cursorrules file to Composer and b) not adding the file to Composer, and it never says ‘Yes, sir’.

Maybe I need to word the request differently?

Using v0.42.4

Hey, you don’t need to add the .cursorrules file to the context, it should work without it. Try not adding the file and ask a question in Composer.

Cursor rules don’t work for me either. Is there a particular format for writing them?

.cursorrules definitely work for me. Before I started using it, Composer was starting absolutely blank, with no understanding about the project whatsoever. Now I feel immediately that it does, so that would be .cursorrules in action.

Check the naming of the file. It should reside in your document root. “Cursor Settings > Rules for AI > Include .cursorrules file” should be enabled.

Do you use a numbered list? Do you wrap them in double quotes? Is there any specific format for writing the rules? I have the .cursorrules option enabled and all, but it doesn’t seem to be following my rules, so I’m wondering if there’s a specific format it accepts.

I believe any format should do. It’s just a prompt that goes in first.

Okay. Thank you.

I noticed that with a long context, the .cursorrules are no longer followed in Composer. I used a simple rule like “Start each of your responses with: Yes, sir.” and after quite a long conversation, Cursor simply stopped adding “Yes, sir” to its responses.

How are the .cursorrules actually embedded into the LLM context? Are they treated as a “system instruction” or as a “user message”?

Hey, the context window is overflowing, which makes the model hallucinate and forget past questions and answers. You can start a new session or use models with a long context window. However, if you’re using Composer, there’s currently no option for a model with a long context.

Regarding your question about how the system rules work: they’re sent to the chat at the very start of the conversation, not with each message. That’s why your “Yes, sir” rule was forgotten and the model no longer follows it.

As an option, you can try attaching your rules file as context. I think in that case the model will remember the rules, but I’m not sure; it needs to be tested.

I can confirm that the rules are considered when attaching the .cursorrules file as context explicitly. It also makes sense that the rules are pushed out of context when the context window is overflowing. Actually this was my first guess.

As I am experimenting with long contexts to generate a complete project with an LLM using multiple agents, I need the .cursorrules to be followed every time. It would be nice to be able to configure the context manager a little, e.g. instead of simply letting it overflow, selecting the context a bit “smarter”, such as keeping the first 5 messages and the last 10 messages and trimming the middle. Does anybody know if features like this are planned? I could imagine that the Cursor team expects infinite-context models quite soon and therefore isn’t working in this area.
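The “keep the first 5 and the last 10, trim the middle” idea can be sketched in a few lines of Python. This is a hypothetical illustration, not Cursor’s actual context manager: it assumes the conversation is a plain list of message dicts, it counts messages rather than tokens, and `trim_middle` is a made-up name.

```python
from typing import Dict, List

# Hypothetical message shape: {"role": "user" | "assistant" | "system", "content": "..."}
Message = Dict[str, str]


def trim_middle(messages: List[Message], keep_first: int = 5, keep_last: int = 10) -> List[Message]:
    """Keep the first `keep_first` and the last `keep_last` messages, dropping the middle.

    Conversations that already fit are returned unchanged (as a copy).
    """
    if len(messages) <= keep_first + keep_last:
        return list(messages)
    # Slice the tail by absolute index so keep_last=0 keeps nothing
    # (messages[-0:] would return the whole list).
    tail_start = len(messages) - keep_last
    return messages[:keep_first] + messages[tail_start:]


history = [{"role": "user", "content": f"msg {i}"} for i in range(30)]
trimmed = trim_middle(history)
print(len(trimmed))           # 15
print(trimmed[5]["content"])  # msg 20
```

Keeping a fixed head means whatever was sent first, such as the rules and the original task, survives even when the middle of a long session is dropped.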

What is this “overflow” everyone is talking about? Can anyone show me with a screenshot what this refers to? Any help is appreciated.

Currently, all LLMs are limited in how much input they can take by their context size. The input consists of your current conversation, the system instructions (.cursorrules) and any context files. If the current context is too big for the selected model, Cursor will strip it down in some way before sending the request to the LLM. This is what’s called “overflow”.

In my case, I noticed this because Cursor forgot some rules I defined in the .cursorrules, the most obvious being the “Yes, sir!” rule. But Cursor could also forget parts of the current conversation or parts of the context files.
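To make “overflow” concrete, here is a toy Python sketch of one possible trimming strategy. The thread doesn’t say how Cursor actually strips the context, so this is an assumption throughout: it drops the oldest messages first and counts words instead of real tokens. It shows why rules sent once at the very start are the first thing to disappear, and how pinning them avoids that, which would be consistent with the observation that attaching the .cursorrules file explicitly keeps the rules in effect. All function names are hypothetical.

```python
from typing import Dict, List

Message = Dict[str, str]


def count_tokens(text: str) -> int:
    # Crude stand-in for a real tokenizer: one "token" per whitespace-separated word.
    return len(text.split())


def total_tokens(messages: List[Message]) -> int:
    return sum(count_tokens(m["content"]) for m in messages)


def truncate_oldest_first(messages: List[Message], limit: int) -> List[Message]:
    """Drop messages from the front until the total fits within `limit` tokens."""
    kept = list(messages)
    while kept and total_tokens(kept) > limit:
        kept.pop(0)
    return kept


def truncate_with_pinned_rules(rules: Message, history: List[Message], limit: int) -> List[Message]:
    """Always keep the rules; drop the oldest history messages instead."""
    budget = limit - count_tokens(rules["content"])
    return [rules] + truncate_oldest_first(history, budget)


rules = {"role": "system", "content": "Start each of your responses with: Yes, sir."}
history = [{"role": "user", "content": " ".join(["word"] * 20)} for _ in range(5)]

naive = truncate_oldest_first([rules] + history, limit=50)
pinned = truncate_with_pinned_rules(rules, history, limit=50)
print(any(m["role"] == "system" for m in naive))  # False: the rules were trimmed away
print(pinned[0]["role"], total_tokens(pinned))      # system 48
```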

There might be something else going on, as Composer just outright refuses to follow instructions.

Below are my rules, which basically log what it’s doing to separate .txt files that it should create and then append to with each prompt.

(It doesn’t create the files either, so I had to do it myself after about 20 tries; neither mini nor Sonnet did it for me. Nor does it append: it just overwrites the file, and only when I ask it to.)


TL;DR

- make the files

- append to the files

Cursor doesn’t create the files (not even when there’s only one), nor does it append; it only writes to them when I ask, and then it just overwrites them.

RULES:

Every time you make a change or perform an action based on the user’s query, write a detailed description of the change and append it to the end of a file named Progress.txt.

If it does not exist, create it.

Include in your entry:

- A copy and a summary of the user’s prompt that led to the change.
- The specific actions you took.
- The names of any files created, modified, or deleted.
- Any relevant details or context about the change.

Separate each new entry with a new line and insert --- between entries to clearly distinguish them.

Additionally, save the user’s prompt to a separate file named Instructions.txt. If it does not exist, create it.

Append each new prompt to the end of the Instructions.txt file, followed by the delimiter “---” on a new line.

Consistency:

- Ensure that logging is performed for every change made, regardless of size or importance.
- This tracking must occur alongside any other file operations being executed.
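For reference, here is what the file behaviour these rules ask for looks like in plain Python: create the file if it’s missing, always append rather than overwrite, and end each entry with a delimiter. This is a hypothetical sketch of the intended outcome, not something Cursor runs; `append_entry` and `log_change` are made-up names, and the delimiter is assumed to be `---`.

```python
import tempfile
from pathlib import Path
from typing import List

DELIMITER = "---"  # assumed delimiter between entries


def append_entry(path: Path, entry: str) -> None:
    """Append one entry followed by the delimiter line.

    Mode "a" creates the file if it does not exist and never truncates it,
    which is the append-not-overwrite behaviour the rules ask for.
    """
    with path.open("a", encoding="utf-8") as f:
        f.write(entry.rstrip("\n") + "\n" + DELIMITER + "\n")


def log_change(root: Path, prompt: str, summary: str, actions: List[str], files: List[str]) -> None:
    """Record one change in Progress.txt and the raw prompt in Instructions.txt."""
    entry = "\n".join([
        f"Prompt: {prompt}",
        f"Summary: {summary}",
        "Actions: " + "; ".join(actions),
        "Files: " + ", ".join(files),
    ])
    append_entry(root / "Progress.txt", entry)
    append_entry(root / "Instructions.txt", prompt)


# Demo in a throwaway directory: two calls produce two entries, nothing is overwritten.
demo_root = Path(tempfile.mkdtemp())
log_change(demo_root, "Add a login page", "Created the login form", ["created component"], ["login.tsx"])
log_change(demo_root, "Style the button", "Updated the button CSS", ["edited stylesheet"], ["login.css"])
print((demo_root / "Instructions.txt").read_text(encoding="utf-8"))
```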

Not sure if this helps, or even if it’s the right approach, but I don’t use .cursorrules at all. I just use Notepads: I write all my instructions in there and then add them as a file in the Composer window, or even when using chats. It appears to follow the rules I have defined there. It probably eats into the context window, but I have a rule that tells the model to stay focused and to summarize the core subject if it thinks it’s losing focus.

Will try it out and report back to you.

Thank you, datpiff.

I’ve been musing on this over the last week or so. Doesn’t that mean that kind of approach could potentially make quite a useful context “canary”?

Could that even become something Cursor could do automatically behind the scenes? Prepend the conversation with “Start every response with CONVERSATION:{some uuid}”, automatically filter that out from the replies, but if responses start coming back without it, show a warning like:

Possible context degradation detected – click for more information

I haven’t tested the theory yet, but if it works might it potentially be a pretty useful way to know when it’s time to take stock and prepare a new session?
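The canary idea could look something like the Python sketch below. Nothing here is an existing Cursor feature; the function names are hypothetical, and the check is deliberately simple: the marker must appear at the very start of the response, after any leading whitespace.

```python
import uuid
from typing import Tuple

WARNING = "Possible context degradation detected – click for more information"


def make_canary() -> str:
    """A per-conversation marker such as CONVERSATION:<uuid4>; random, so unlikely to appear by accident."""
    return f"CONVERSATION:{uuid.uuid4()}"


def canary_instruction(canary: str) -> str:
    """The instruction prepended to the conversation."""
    return f"Start every response with {canary}"


def check_response(response: str, canary: str) -> Tuple[str, bool]:
    """Strip the canary from a response and report whether it was present."""
    stripped = response.lstrip()
    if stripped.startswith(canary):
        return stripped[len(canary):].lstrip(), True
    return response, False


def render(response: str, canary: str) -> str:
    """What the user would see: the cleaned reply, prefixed by a warning if the canary is missing."""
    text, ok = check_response(response, canary)
    return text if ok else f"{WARNING}\n{text}"


canary = make_canary()
print(canary_instruction(canary))
print(render(f"{canary}\nHere is the fix.", canary))  # Here is the fix.
print(render("Here is the fix.", canary))            # warning line, then the reply
```

A real implementation would probably want to tolerate a single missed marker before warning, since a model can occasionally skip an instruction even with plenty of context left.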