Where does the bug appear (feature/product)?

Cursor IDE

Describe the Bug

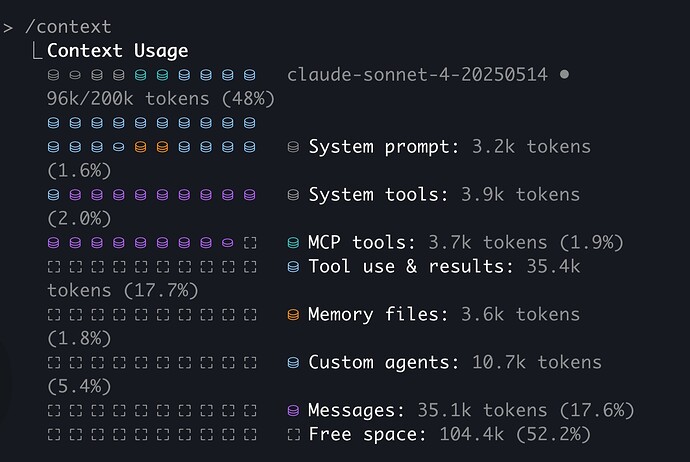

There seems to have been a progressive loss of transparency in the context usage indicator in the prompt editor. It used to show at least which rules were applied, but it no longer does. On top of that, for a 200k context window, Cursor now shows only 164k as available (Claude models in particular).

This lack of transparency is very frustrating. I have been having problems with the agent not following rules, and now I can no longer even see which rules have been applied. We have never been able to see WHY a rule was applied, i.e. which trigger caused it: always, intelligently (and what intelligence!), globs, manual reference, etc.

We need insight into how our context is being used. Claude Code offers a /context command. Cursor should not only make sure the context usage indicator actually shows which rules are applied; we really need a /context command as well, so we can see EXACTLY what is using context and why.

Cursor spends FAR too much time compressing context, often after just one prompt, when the context should NOT be full. The fact that I only seem to get 164k of the 200k that Claude models are supposed to provide is also rather annoying. I don't care if Cursor is using some of that context itself… I need to KNOW that, how much, and WHY. Taking away 36k tokens of context space with ZERO explanation is insane.

Steps to Reproduce

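Select a Claude model (200k context window) and check the context usage indicator in the prompt editor. Applied rules are not listed, and only 164k is shown as available.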

Expected Behavior

The context usage indicator displays which rules were applied.

The context usage indicator should also show how much of the REAL context window (e.g., 200k, 270k, 1M) is used by Cursor itself.

A /context command should be made available so users can identify exactly what is using context, how much, and why, so they can optimize their usage as necessary.

Operating System

macOS

Version Information

Version: 2.3.34

VSCode Version: 1.105.1

Commit: 643ba67cd252e2888e296dd0cf34a0c5d7625b90

Date: 2026-01-10T21:17:10.428Z

Electron: 37.7.0

Chromium: 138.0.7204.251

Node.js: 22.20.0

V8: 13.8.258.32-electron.0

OS: Darwin arm64 25.0.0

Does this stop you from using Cursor?

No - Cursor works, but with this issue