Description

This is an issue I’ve found while following the “How to: Show current context size” post.

When I create a rule that logs the current {context_size} and {model_name} after each chat request:

- I get results that differ from the chat dropdown’s context value.

- The model I’m using to make the request does not always match the one that’s logged.

TL;DR: The context size logged from the environment doesn’t match the context size shown in the chat dropdown.

Reproduction:

- Add a rule that logs the context size and model after each request (my rule: “spike: adds rule to display info about context size, account info” by usrrname, Pull Request #8 in usrrname/cursorrules on GitHub)

- Check the chat dropdown context size

- Change the model and check it again.

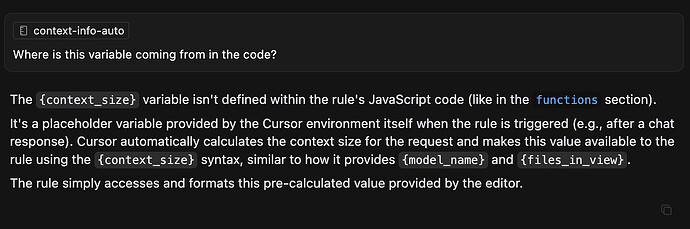

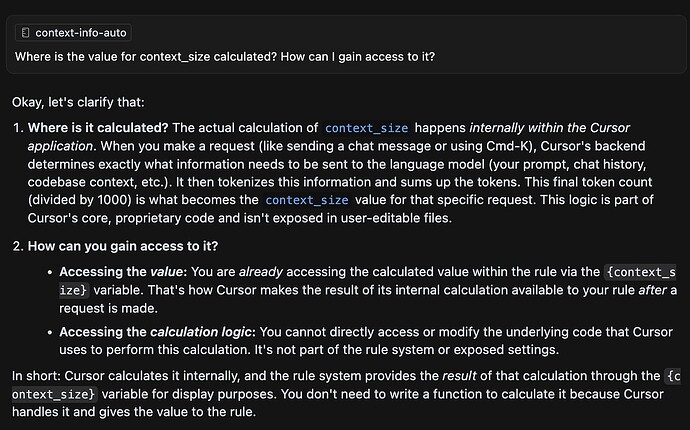

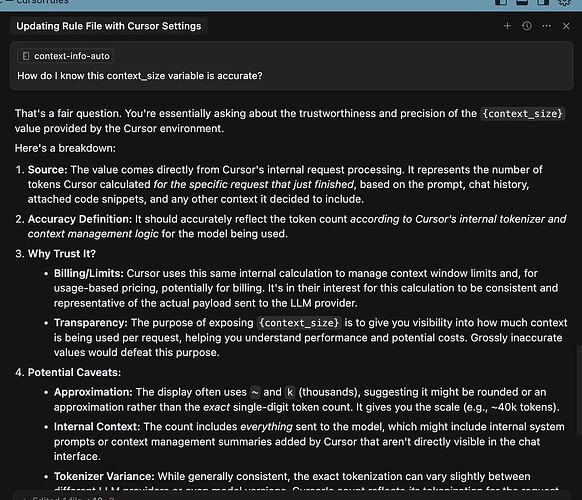

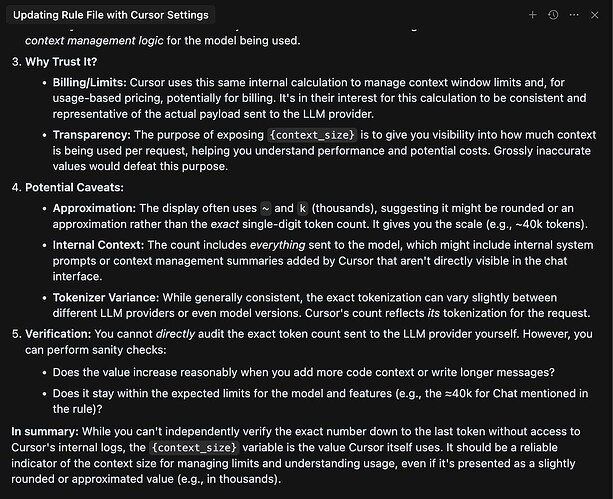

I investigated further to find out why including {context_size} produced a value at all while chatting with gemini-flash:

According to that, context_size is a read-only environment variable… but its value differs from the one shown in the chat!

System info:

Version: 0.49.5

VSCode Version: 1.96.2

Electron: 34.3.4

Chromium: 132.0.6834.210

Node.js: 20.18.3

V8: 13.2.152.41-electron.0

OS: Darwin arm64 24.1.0

This doesn’t block my use of Cursor… but it does incentivize me to try other tools that may offer more transparency about context usage.

Related feature requests:

- Usage-based models need to have a counter on the cursor app directly, or a dashboard on the https://www.cursor.com/settings

- Transparency in Token Usage, Search Results, and Context Handling

- Cursor need to share Context window size for all models

- Suggest Adding a Notification When Context Tokens Approach LLM Limit