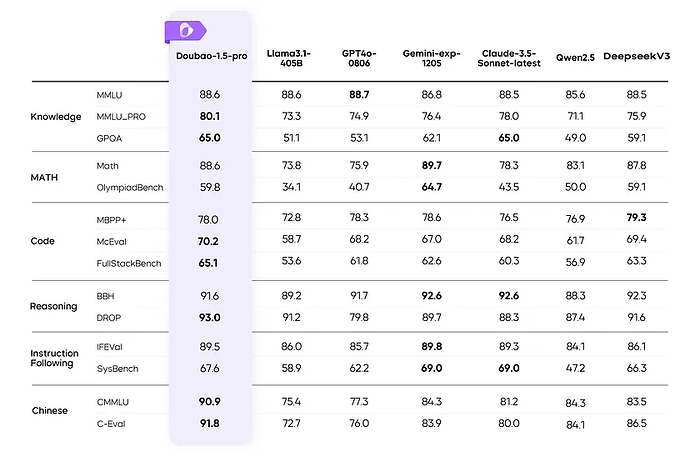

I was just browsing the internet and came across an article about Doubao, an LLM from ByteDance, the company behind TikTok. So I wanted to share how quickly things are improving. Watch how these companies compete with each other; it's really interesting, because the competition keeps working in our favor. They compete, and we win with the results they achieve. I've attached an image of a benchmark.

Let's look at the numbers. The Doubao-1.5 Pro-32k model costs just ¥0.8 (about US$0.11) per million input tokens and ¥2 per million output tokens. The lightest version, Doubao-1.5-lite-32k, cuts prices even further, to ¥0.3 per million input tokens. To put that into context, that's about 70% cheaper than the industry average.
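To make these per-million-token prices concrete, here is a small cost calculator sketch. The prices are the ones quoted above; the workload size (10M input, 2M output tokens) is a hypothetical example, and the CNY-to-USD rate is an assumption derived from the post's own "¥0.8 ≈ US$0.11". No output price was given for the lite model, so it is only costed on input.

```python
# Per-million-token prices quoted in the post, in CNY (¥).
PRO_INPUT_CNY = 0.8
PRO_OUTPUT_CNY = 2.0
LITE_INPUT_CNY = 0.3  # no lite output price was quoted, so it is omitted

# Assumption: exchange rate implied by the post's "¥0.8 ≈ US$0.11".
USD_PER_CNY = 0.11 / 0.8


def cost_cny(input_tokens: int, output_tokens: int,
             input_price: float, output_price: float = 0.0) -> float:
    """Return the cost in CNY for a workload, given per-million-token prices."""
    return (input_tokens * input_price + output_tokens * output_price) / 1_000_000


# Hypothetical workload: 10M input tokens and 2M output tokens.
pro = cost_cny(10_000_000, 2_000_000, PRO_INPUT_CNY, PRO_OUTPUT_CNY)
lite_input_only = cost_cny(10_000_000, 0, LITE_INPUT_CNY)

print(f"Pro: ¥{pro:.2f} (~US${pro * USD_PER_CNY:.2f})")
print(f"Lite, input only: ¥{lite_input_only:.2f}")
```

For that workload, Pro comes to ¥8 for input plus ¥4 for output, ¥12 in total (roughly US$1.65 at the assumed rate), while 10M input tokens on the lite model cost ¥3.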


Unfortunately, it looks like this model is not open source, so it's unlikely to be added to Cursor: we'd first have to put zero-data-retention agreements in place and go through privacy and security compliance procedures with Doubao directly.

I think we need a benchmark for adherence across ALL LLMs:

Adherence to privacy, security, limitations, .RULES (Hello_World_.Cursor), etc.

I don't give a ■■■■ about model efficiency, blah…

If a model cannot adhere to directives, especially over a long conversation that fills its context length, that is HORRIFIC.

Let me give a real-world example:

Suppose you are facing capital punishment and have a compelling case for clemency: evidence that you are innocent. But your pile of evidence is larger than the agency's staff can afford to read, so they kill your clemency petition, and with it, you (your APP).

Yet you are ~~BRIBING~~ PAYING them to READ your evidence (your context).

And your application is murdered… (no ~~ needed in this analogy)

If I am wrong (and I will be), tell me how. EXACTLY. If you refuse to teach, you done goofed.

And… they fail to tell, elaborate, give, blah.

NOW YOU KNOW what the techBroWorld is ALSO dealing with…

Now take those layers up a notch…

Same politics, blah.

Which brings me to URL and User-Agent injection efforts: Operator agents should be wary of User-Agent injection poisoning.

Agreed. There's a good benchmark called LiveBench that has an IF (instruction following) category. Interestingly, it shows Claude with more or less the same coding capability as R1, but R1 is a lot better at instruction following, which makes it the better model to use. Let's hope we get it in agent mode soon; it also shows its thinking, which makes its responses easier to debug.
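As a rough illustration of how an adherence score can be computed automatically, here is a toy sketch. It assumes each rule can be expressed as a pass/fail check on the response text; the rules and the `adherence_score` function are hypothetical and this is not LiveBench's actual implementation. Rules like "respect privacy" are much harder to check mechanically than the simple ones shown here.

```python
import json
from typing import Callable

# A rule is a (name, check) pair; check returns True if the response complies.
Rule = tuple[str, Callable[[str], bool]]


def is_json(text: str) -> bool:
    """True if the text parses as JSON."""
    try:
        json.loads(text)
        return True
    except ValueError:  # json.JSONDecodeError is a subclass of ValueError
        return False


# Hypothetical, verifiable rules in the style of instruction-following benchmarks.
RULES: list[Rule] = [
    ("at most 50 words", lambda r: len(r.split()) <= 50),
    ("no TODO markers", lambda r: "TODO" not in r),
    ("valid JSON", is_json),
]


def adherence_score(response: str, rules: list[Rule]) -> tuple[float, list[str]]:
    """Return the fraction of rules satisfied and the names of violated rules."""
    failed = [name for name, check in rules if not check(response)]
    return 1 - len(failed) / len(rules), failed


score, failed = adherence_score('{"status": "ok"}', RULES)
score2, failed2 = adherence_score("TODO: fill this in later", RULES)
print(score, failed)
print(score2, failed2)
```

The first response satisfies all three rules; the second violates "no TODO markers" and "valid JSON", so it scores one out of three. Averaging such scores over many prompts gives a crude adherence benchmark.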

I have a semi-solution to this.

Update: I've just read that they're actively working on it (Deepseek R1 Agent - #18 by danperks).