I use both lol, but with Claude I know exactly how much I am paying and for what

Have you actually tried using auto? What have you done to practice and learn how to prompt better?

Me too. I don’t get why people just refuse to even try auto. It’s funny, though, seeing how high these people’s usage is, with some of them getting thousands of dollars’ worth of usage for 20 bucks.

There’s only Claude, with no Gemini or Grok. This limits your capabilities as a developer.

I don’t use this ■■■■, especially grok

I am not seeing any excessive token usage. It all depends on the references that you have added.

If you set it to ask and add a bunch of files, obviously all those files will be added to the context and you will have that token usage.

If you set it to agent and simply type “test”, you leave it to Cursor to decide which files get added to the context. It might be one or it might be 50, so the token usage varies depending on your project. I ran that “test” chat in agent mode, and some projects used around 20k tokens while others used 100k, but that’s what agent does.

I don’t see any bugs here, that’s just the difference between ask and agent.
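To make the ask vs. agent difference concrete, here is a rough sketch of how context size scales with the number of files pulled in. The 4-characters-per-token figure is only a common rule of thumb, not Cursor's actual tokenizer, and the file sizes are made up for illustration.

```python
# Rough sketch: estimate context size from the files attached to a request.
# ASSUMPTION: ~4 characters per token is a rule of thumb, not Cursor's tokenizer.
CHARS_PER_TOKEN = 4


def estimate_context_tokens(file_contents: list[str], prompt: str) -> int:
    """Approximate token count for a prompt plus every file in the context."""
    total_chars = len(prompt) + sum(len(text) for text in file_contents)
    return total_chars // CHARS_PER_TOKEN


# Hypothetical project: the same "test" prompt with 1 file vs. 50 files in context.
one_file = ["x" * 8_000]
fifty_files = ["x" * 8_000] * 50

small = estimate_context_tokens(one_file, "test")
large = estimate_context_tokens(fifty_files, "test")
print(small, large)
```

Same prompt, very different totals, purely because of how many files ended up in the context.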

Cursor is so terrible, I won’t use it anymore.

A lot of people here don’t understand very much about how LLMs and Cursor work.

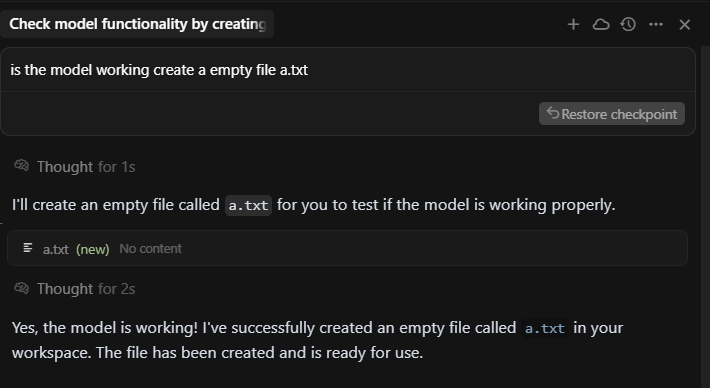

I just asked Cursor to create a blank txt file using Claude 4 Sonnet Thinking in a new chat, and look at the high token usage. It cost me $0.03.

Then I asked DeepSeek R1 0528 to create an HTML file with content in the same chat, and the cost was 0.

There seems to be a bug

The current workaround seems to be using a lower-tier model like DeepSeek V3, cursor-small, or even DeepSeek R1, but those don’t make inline edits.

There is a bug, but it feels like a scam, honestly. This is Claude 4.1 Opus. The message I sent was around 2 paragraphs. That’s the response. And that’s the edit: 1 line. This is what just appeared in usage.

Hi, it looks like this is an ongoing chat/conversation that is almost at the context limit (91% full). Anthropic models have a 200k context limit (168k input, 32k output). Sending followups in chats that have been filled to the context limit can use a lot of tokens, regardless of how much text is in the text box.
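As a rough illustration of why a short followup can still be expensive, the sketch below estimates the input tokens sent with one message in a nearly full chat. It assumes the fill percentage is measured against the 168k input limit, and the 300-token message size is a made-up example.

```python
# Sketch: input tokens sent with a followup in a nearly full chat.
# The 168k input limit comes from the figures quoted above.
INPUT_LIMIT = 168_000


def followup_input_tokens(fill_fraction: float, new_message_tokens: int) -> int:
    """Prior conversation tokens plus the new message."""
    # ASSUMPTION: the fill percentage is measured against the input limit.
    history = round(fill_fraction * INPUT_LIMIT)
    return history + new_message_tokens


# Hypothetical: a ~300-token message (about two paragraphs) at 91% full.
tokens = followup_input_tokens(0.91, 300)
print(tokens)
```

Under these assumptions the typed message is a tiny fraction of what gets sent. The existing conversation dominates.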

I’d recommend creating new chats often to manage context windows, especially when starting to fix new bugs or implement new features.

If the context of a previous chat is relevant, you can use @name of other chat to pull a summary of the other chat into the new chat.

Claude Opus is also expensive: it costs $18.75 per million cache write tokens. For most tasks, I’d recommend using Sonnet (which is 5x cheaper) unless the task really needs the extra intelligence from Opus.
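For a sense of scale, here is a minimal sketch of the arithmetic using the cache write price quoted above. The 150k token count is hypothetical, the Sonnet price is derived only from the "5x cheaper" figure, and treating every token as a cache write is a simplification for illustration rather than how any particular request is actually billed.

```python
# Sketch: cache write cost for one request, Opus vs. Sonnet.
OPUS_CACHE_WRITE_PER_M = 18.75  # USD per million tokens, from the post above
SONNET_CACHE_WRITE_PER_M = OPUS_CACHE_WRITE_PER_M / 5  # derived from "5x cheaper"


def cache_write_cost(tokens: int, price_per_million: float) -> float:
    """Cost in USD if all `tokens` are billed as cache writes."""
    return tokens / 1_000_000 * price_per_million


# ASSUMPTION: a hypothetical request with 150k tokens, all billed as cache writes.
tokens = 150_000
opus_cost = cache_write_cost(tokens, OPUS_CACHE_WRITE_PER_M)
sonnet_cost = cache_write_cost(tokens, SONNET_CACHE_WRITE_PER_M)
print(f"Opus: ${opus_cost:.4f}  Sonnet: ${sonnet_cost:.4f}")
```

With these numbers a single request comes to roughly $2.81 on Opus versus about $0.56 on Sonnet.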