While I’m excited to hear about the new pricing model, it’s missing some key components that I hope you’ll address soon.

1. Transparency Issues

The new pricing model lacks the transparency of the old system. Since the change has been live for a a-bit now, the lack of documentation is disappointing.

We need clear information about:

- How you determine when to rate-limit users

- How long rate-limiting lasts

- How different models impact rate-limiting decisions

2. Value Proposition Concerns

A key reason I brought my project to Cursor was the unlimited slow requests feature. Removing this significantly reduces the product’s overall value.

My situation: Earlier in my project, I used Claude Code exclusively, spending thousands per month. With your new pricing model, my options as an independent developer are limited to either:

- Stop using the service entirely

- Drastically reduce usage

Even though I didn’t heavily use slow requests in the previous model, my costs still felt manageable at $100-200/month versus the thousands I was spending before.

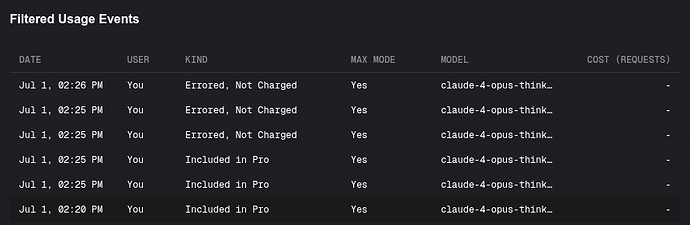

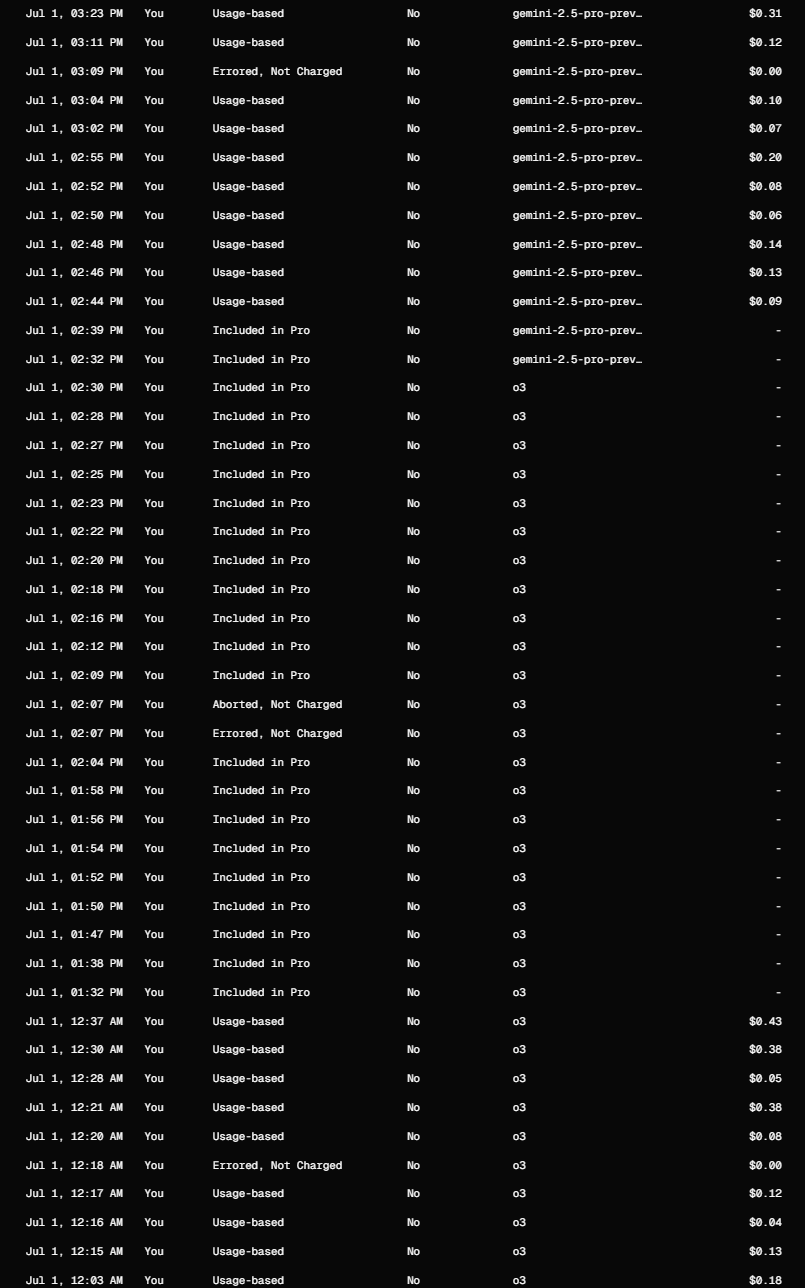

3. Suspected Cost Increases

This may be speculation, but it strongly feels like pricing has been adjusted upward for the new plans. My month-over-month analysis of the same model shows increased costs for similar requests.

Final Thoughts

I understand you need to make changes to stay competitive—other AI firms have rolled out similar high-cost plans. However, for independent developers without enterprise budgets, this new system desperately needs better transparency.

Please update your documentation and supporting processes to clearly describe:

- How you calculate billing

- When charges are applied

- How requests and tools contribute to usage costs

Until these transparency issues are addressed, I’m opting out.

Edit:

Claude 3.7 Extra requests vs Claude 4 extra requests x5 increase with new plan. Obviously not a 1:1, rates went up, part of the Screencap requests were on the old system, etc..