Describe the Bug

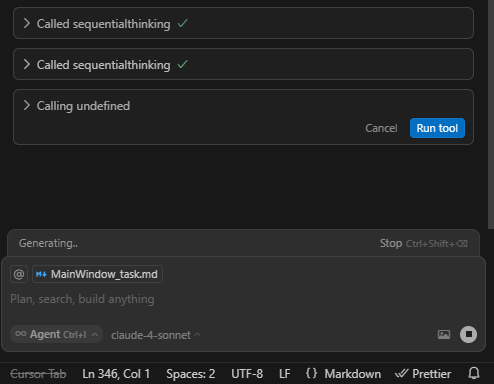

I recently observed that Cursor is having a hard time calling the correct tool. It shows ‘Calling undefined’ for a few seconds (sometimes 10 seconds or more) before showing the correct MCP server tool. If you click ‘Run tool’ while it is still in the undefined state, Cursor freezes in waiting mode, and I have to stop the chat when that happens.

Steps to Reproduce

I primarily use Sonnet 4, and I observed this behavior while using this model.

In a new chat, write a new prompt, for example: “I need you to investigate the cause of the issue in our dashboard, use sequential thinking mcp server”. The issue is not limited to this MCP server; every MCP server I have shows it. Cursor then attempts to call the tool, but it first displays ‘Calling undefined’ before displaying the correct tool name, at which point you can run the tool.

Expected Behavior

Previously, I did not have this issue in Cursor. When an MCP server was called, Cursor would instantly show the tool name and be able to run it. No waiting time, no freezes.

Screenshots / Screen Recordings

Operating System

Windows 10/11

Current Cursor Version (Menu → About Cursor → Copy)

Version: 1.2.4 (user setup)

VSCode Version: 1.99.3

Commit: a8e95743c5268be73767c46944a71f4465d05c90

Date: 2025-07-10T17:09:01.383Z

Electron: 34.5.1

Chromium: 132.0.6834.210

Node.js: 20.19.0

V8: 13.2.152.41-electron.0

OS: Windows_NT x64 10.0.19044

Additional Information

I observed it when using Claude models, primarily Sonnet 4. I have not tested this model or others intensively, as that would use up tokens I need to save.

Does this stop you from using Cursor

No - Cursor works, but with this issue