The documentation used to have a table listing each model and its price or request usage.

Where can I find that?

I just need to know how much request usage each model consumes so I can plan which model to use and optimize my token/request usage.

It’s also gone. Transparency is not their main attribute.

They also seem to have cut off the request-counting API. The Chrome extension and the VS Code extension that relied on it don’t work anymore.

How is Trae? I might try it out. I’ve only used Windsurf as an IDE, and I’m on the waitlist for the AWS one.

Me too.

Everyone knows that Cursor has to pay Anthropic $15 per 1M output tokens and $3 per 1M input tokens. I think $20 would only cover a few requests at those rates.
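Here is a rough sketch of that arithmetic using the per-token prices quoted above. The token counts per request are assumptions for illustration only. The sketch ignores prompt caching and any discount Cursor may get.

```python
# Rough cost model using the Anthropic list prices quoted in the post.
# Prices are USD per 1M tokens; the token counts used below are illustrative assumptions.
INPUT_PRICE_PER_M = 3.00
OUTPUT_PRICE_PER_M = 15.00


def request_cost(input_tokens: int, output_tokens: int) -> float:
    """USD cost of one request at the quoted input/output rates (no caching)."""
    return (input_tokens * INPUT_PRICE_PER_M
            + output_tokens * OUTPUT_PRICE_PER_M) / 1_000_000


def requests_per_budget(budget_usd: float, input_tokens: int, output_tokens: int) -> int:
    """How many identical requests fit in a fixed budget, rounded down."""
    return int(budget_usd // request_cost(input_tokens, output_tokens))


if __name__ == "__main__":
    # Hypothetical agent request: 50k tokens of context in, 2k tokens out.
    print(f"cost per request: ${request_cost(50_000, 2_000):.2f}")
    print("requests per $20:", requests_per_budget(20, 50_000, 2_000))
```

The result depends heavily on context size. It also depends on how many model calls a single agent "request" triggers behind the scenes, so treat the per-request figure as a lower bound for agent use.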

This poses a serious challenge for Cursor, which introduced the $20/month plan to attract users while hoping that Anthropic would lower prices in the future (which didn’t happen).

Charging based on API usage would be fair for Cursor and help prevent bankruptcy, but it’s hard for users to accept, especially when the AI consumes a large number of tokens without producing useful results.

I think the best solution for now is to gradually increase the price and optimize how Cursor uses tokens. Then, they should develop a specialized AI model for code generation (similar to what Vercel is doing with v0).

VS Code + GitHub Copilot and Anthropic + Claude Code are likely the future, as they truly own the AI. Microsoft’s obstruction and Anthropic’s unwillingness to lower prices could really bring Cursor down. I think immediate action is needed, though of course without driving all users away in the meantime.

xAI’s Grok 4 + Cursor.

GitHub Copilot refuses to enable xAI models.

Anthropic doesn’t have an IDE and positions itself as if IDEs won’t exist in a year.

In reality, Anthropic doesn’t need to develop an IDE at this point (I don’t think it would be difficult for them). There are already quite a few tools like Cursor burning cash on their behalf. Imagine if they also built a customized version of VS Code like Cursor. What would happen?

- If they price it higher than Cursor: no one would use it.

- If they price it lower than Cursor:

• If no one uses it → building the IDE is pointless.

• If many users switch from Cursor to Anthropic-Cursor → they would earn less money for more effort → still pointless.

So I believe that, for now, it’s completely reasonable for them to just maintain a CLI to serve developers who don’t like IDEs.

When would they build an IDE? Two scenarios:

- When companies like Cursor run out of money and can no longer sustain their losses, possibly leading to Anthropic acquiring them at a low price.

- When other code generation models reach the same quality level as Sonnet at significantly lower cost. In that case, Anthropic might launch an IDE with ultra-cheap Sonnet access or lower Sonnet pricing for Cursor.

In any scenario, it’s clear that the real game is controlled by those who own the LLM.

The only viable path for Cursor to survive is to develop its own cost-efficient code generation model.

Do you remember when Anthropic gave Cursor a discount on Sonnet 4? The actual cost was 2.5 times higher.

Everyone rushed to use Cursor with Sonnet without giving any thought to optimizing context.

Then Anthropic stopped the discount → Cursor users suddenly found everything unacceptably expensive → drama ensued.

Nope, Cursor could just pass the real model costs on to users, say at 70% of API pricing (absorbing the other 30% as cash burn).

You can go a long way with Gemini/o3 for planning and Sonnet 3.5/Flash/DeepSeek/Kira/Qwen for coding.

But they prefer to deceive users.

Claude Code is a joy, but it is also built on burning lots of cash, and that will change later. A possible twist: their models may be optimized and much cheaper to run now.

I’m getting absolutely decimated by this new pricing model. Can you roll back the Cursor build, and even roll the LLMs back to Sonnet 3.7? Or was the whole intent to charge your users $1000/day each? I literally hit $80 in token spend within an hour or two.

I genuinely believe Cursor devs are building Cursor with Claude Code or Cline; otherwise, they would’ve raised this with stakeholders.

Switch to Copilot + VS Code; it’s almost the same. Cursor is almost dead, and there is no going back for them from this.

Where can I find the $60 Pro+ plan? I can’t find it on the website.

Unless you’ve already subscribed, I don’t know why people are still using cursor.

Just use their competition until finance realises they’re killing the company.

Their “Claude 4” identifies itself as Claude 3.5, and it shows. Trae and Augment are currently performing better. Cursor’s pricing model ■■■■■. Don’t reward bad behaviour, or you’ll just get more of the same.

SO IT IS A BAIT AND SWITCH? I have the receipts and sources from Cursor founder Michael Truell to back this up. It looks blatantly like Cursor, without much public discussion or acknowledgement, set up a new set of plan terms (not just pricing). These terms moved all Pro/Ultra users from the guaranteed request-based plans we signed up for (some of us annually) to compute-limit plans based on direct API usage. Under the new terms there is only $20 or $60 to spend, plus a subpar Auto mode. This is why everyone is burning through their tokens so fast: it’s API fees, not request limits.

It’s also likely that a huge spike in cache read/write tokens is driving up costs even further for everyone, as clearly validated in this post: Unofficial Community Discussion to Give Fedback on Plan Rates - #7 by mokhir
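Here is a minimal per-turn cost sketch for readers wondering how cache tokens factor in. It assumes Anthropic's prompt-caching multipliers as I understand them: cache writes are billed at 1.25× the base input price and cache reads at 0.1×. Treat these multipliers, the loop shape, and the token counts as assumptions, and check them against current pricing. The sketch shows that an agent loop re-reads the whole cached context on every tool-call turn, so cache-read tokens pile up even though each one is cheap.

```python
# Per-turn cost with prompt caching. Multipliers are assumptions:
# cache write = 1.25x base input price, cache read = 0.1x base input price.
BASE_INPUT_PER_M = 3.00
OUTPUT_PER_M = 15.00
CACHE_WRITE_PER_M = BASE_INPUT_PER_M * 1.25
CACHE_READ_PER_M = BASE_INPUT_PER_M * 0.10


def turn_cost(cache_read: int, cache_write: int, fresh_input: int, output: int) -> float:
    """USD cost of one model call, split by token category."""
    return (cache_read * CACHE_READ_PER_M
            + cache_write * CACHE_WRITE_PER_M
            + fresh_input * BASE_INPUT_PER_M
            + output * OUTPUT_PER_M) / 1_000_000


def agent_loop_cost(turns: int, start_context: int, growth_per_turn: int,
                    output_per_turn: int) -> float:
    """Hypothetical agent loop: each turn re-reads the whole cached context,
    writes the newly added tokens to the cache, and emits some output."""
    total = 0.0
    context = start_context
    for _ in range(turns):
        total += turn_cost(cache_read=context, cache_write=growth_per_turn,
                           fresh_input=0, output=output_per_turn)
        context += growth_per_turn
    return total


if __name__ == "__main__":
    # 25 tool-call turns, 30k starting context, +3k tokens and 500 output tokens per turn.
    print(f"${agent_loop_cost(25, 30_000, 3_000, 500):.2f}")
```

With these assumed numbers, cache reads make up roughly half of the loop's total cost, despite being priced at a tenth of normal input.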

So, without really making sure people knew, they silently moved people to a plan worth 10% of the usage value of the plans we originally signed up for, the plans our sign-up invoices said we would have.

Michael Truell, the founder, acknowledges this here:

Link: Updates to Ultra and Pro | Cursor - The AI Code Editor

Interestingly, Michael also said that “Existing users can choose to stay with the “500 request limit” method if they prefer.” That is exactly what we are all asking for: to get the same number of requests we’ve already been paying for.

I, for one, will require that as an existing user I stay on the 500 request limit method permanently, as offered by Michael.

I assume this is why the staff refuses to answer the basic question of why people are burning through their tokens so fast and being billed hundreds to thousands of dollars in usage fees. The reason is that they apparently chose to bait and switch their users onto a plan with 10% of the usage value. That is why they defend the cache read and cache write charges as accurate. They always skip over why people are getting only 10% of the usage they paid for and that Cursor is contractually obligated to provide.

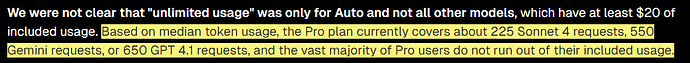

Additionally, Michael still claims that “Based on median token usage, the Pro plan currently covers about 225 Sonnet 4 requests, 550 Gemini requests, or 650 GPT 4.1 requests, and the vast majority of Pro users do not run out of their included usage.”
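Taking the quoted figures at face value, we can back out the implied average cost per request. This assumes the Pro plan's included usage is the $20 mentioned earlier in the thread. The sketch covers only that arithmetic and does not model what any individual request actually costs.

```python
# Back out the implied average cost per request from the quoted plan figures.
# Assumption: Pro includes $20 of usage at API prices.
PLAN_BUDGET_USD = 20.0
CLAIMED_REQUESTS = {"Sonnet 4": 225, "Gemini": 550, "GPT 4.1": 650}


def implied_cost_per_request(budget_usd: float, requests: int) -> float:
    """Average USD per request that would make the quoted request count hold."""
    return budget_usd / requests


if __name__ == "__main__":
    for model, n in CLAIMED_REQUESTS.items():
        print(f"{model}: ${implied_cost_per_request(PLAN_BUDGET_USD, n):.3f} per request")
    # Getting only ~10% of the claim would mean roughly 22 Sonnet 4 requests.
    print("10% of the Sonnet 4 claim:", CLAIMED_REQUESTS["Sonnet 4"] // 10)
```

If users really get only about 10% of the claimed count, their average request costs roughly ten times the implied figure.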

Link: Clarifying Our Pricing | Cursor - The AI Code Editor

However, everyone I’ve seen complaining is getting only about 10% of those claimed request amounts. If we actually got those request amounts, it would all have been fine, but being left with 10% of what we signed up and paid for is deceptive.

To speculate on an alternative story: I believe Cursor devs do dogfood their own product.

That’s why they haven’t caught the latest developments in Claude Code, or noticed just how expensive Cursor became relative to the tools that came out in the last few weeks.

Internally, they definitely have an admin pool of credits, so their Claude 4 calls are free. The engineers don’t need to economize like us, and because they don’t economize, they don’t feel the pressure we do, so they let this whole thing slip.

Pro user here. Never had a problem in the old plan.

The new plan? I hit 75+% of my usage a day into my new billing cycle. Excuse me? What am I supposed to do, not use it for a month? What am I paying for then? I could save money by just cancelling my account because it’d be the same thing as what I’m doing now.

Despite doing that side-by-side comparison and finding a 260% difference in cost, I don’t believe they’re doing anything intentionally malicious.

I speculate that the system prompts and tool calls Cursor’s Agent uses with Claude 4 are less optimized than Claude Code’s.

Claude Code devs can just hop on a call with the Anthropic model team, and directly ask questions on how to optimize stuff. Cursor won’t have that level of access.

Cursor’s system prompts and tool calls have to work for a multitude of models, and they will always be one step behind the Claude Code internal team regarding secret news and updates.

It’s my belief that if Cursor purely offers Claude 4, it will always be worse than Claude Code offering Claude 4.

The only competitive edge Cursor has is doing things Anthropic can’t, like mixing models during calls. However, the idiotic off-the-shelf caching system Anthropic provides locks Cursor into using ONLY a single LLM provider per agentic chat. Relying on THEIR cache means you cannot switch to Gemini mid-call sequence without repopulating the cache.

I wish Cursor would design their OWN cache, or honestly, stop relying on a vendor lock-in cache altogether. They could then design an agentic coding experience that uses smart models for certain turns and cheap, less capable models for the edit_tool turns.

Another limitation of the ‘responses’ LLM APIs is that they lock you into a long chat, so you cannot switch to other models mid-chat.

I’d just build it differently: no more using a single fixed LLM per agentic chat.
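Here is a rough sketch of the per-turn routing idea above, with smart models for reasoning turns and cheap models for edit_tool turns. Everything in it is hypothetical: the model names, prices, and turn kinds are placeholders, not Cursor's or any vendor's API. The sketch encodes the caching point from the thread. A turn moved to a different model sees the context uncached, so switching pays off only when the cheap model's uncached price still beats the smart model's cached price.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Model:
    name: str                  # placeholder name, not a real model ID
    input_per_m: float         # USD per 1M uncached input tokens
    cached_input_per_m: float  # USD per 1M cached input tokens
    output_per_m: float        # USD per 1M output tokens


# Hypothetical price points for illustration only.
SMART = Model("smart-model", 3.00, 0.30, 15.00)
CHEAP = Model("cheap-model", 0.10, 0.025, 0.40)

# Turn kinds a cheap model is assumed to handle well enough.
CHEAP_TURN_KINDS = {"edit_tool", "read_file", "search"}


def call_cost(model: Model, context_tokens: int, output_tokens: int, cached: bool) -> float:
    """USD cost of one call, depending on whether the context is cached for that model."""
    in_price = model.cached_input_per_m if cached else model.input_per_m
    return (context_tokens * in_price + output_tokens * model.output_per_m) / 1_000_000


def pick_model(turn_kind: str, context_tokens: int, output_tokens: int) -> Model:
    """Stay on the smart model unless the turn is a 'cheap' kind AND switching
    (paying uncached input on the cheap model) actually costs less."""
    if turn_kind not in CHEAP_TURN_KINDS:
        return SMART
    stay = call_cost(SMART, context_tokens, output_tokens, cached=True)
    switch = call_cost(CHEAP, context_tokens, output_tokens, cached=False)
    return CHEAP if switch < stay else SMART


if __name__ == "__main__":
    for kind in ["plan", "edit_tool", "reason", "edit_tool"]:
        print(kind, "->", pick_model(kind, context_tokens=60_000, output_tokens=1_500).name)
```

The cost comparison keeps per-turn routing from becoming a net loss when the context is large and already cached on the smart model. A real design would also need to account for cache expiry and for the quality risk of the cheap model.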

Why hasn’t Anysphere done anything yet when customers are complaining about the new pricing? It’s been almost a month (since July 5)…