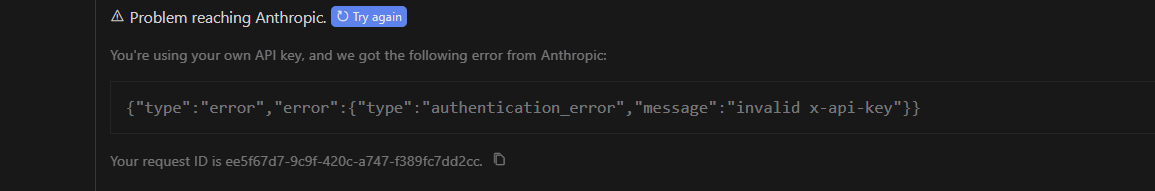

This is strange. In theory, when I enter my custom OpenAI API base URL and key, requests for Claude 3.5 Sonnet should go through that endpoint. In reality, the request was sent to the Anthropic API.

I encountered the same problem.

I encountered the same problem!!

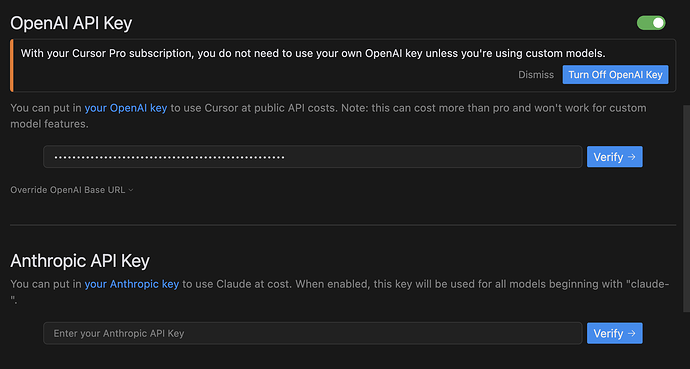

The problem is that it uses the topmost active model from the selection above to verify the key. If you temporarily disable any non-OpenAI models, it works.

Edit: found this related post with an apparent “solution”

I have the same.

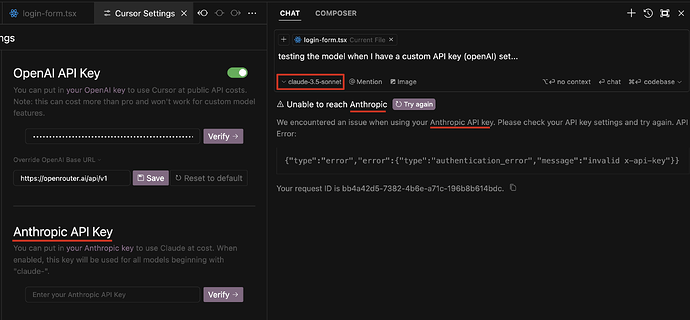

@DerKorb I tried swapping the order of the active models by toggling them on/off to get my custom model name listed below Claude, but it didn’t solve the issue:

Even having Claude as the only enabled model didn’t work. It only works if I disable the custom API key:

Um… I want to confirm if we are experiencing the same issue.

I mean, I want to use the Claude model through platforms like OpenRouter, so I enabled the custom OpenAI API. But all my Claude model requests are inexplicably sent to the Anthropic API, instead of OpenRouter.
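For anyone who wants to rule out the key or endpoint itself, here is a minimal sketch that builds the same kind of request outside the editor. The base URL and model ID are what I believe OpenRouter uses for its OpenAI-compatible API (treat both as assumptions and check their docs), and `build_chat_request` is just a helper name I made up.

```python
import json
import urllib.request

# Assumed values: verify against your provider's documentation.
BASE_URL = "https://openrouter.ai/api/v1"
MODEL = "anthropic/claude-3.5-sonnet"


def build_chat_request(base_url, api_key, model, prompt):
    """Build (but do not send) an OpenAI-style chat completion request."""
    body = json.dumps({
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }).encode("utf-8")
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=body,
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    # Placeholder key: replace with your own before sending anything.
    req = build_chat_request(BASE_URL, "sk-your-key-here", MODEL, "ping")
    # Show which host the request would actually be sent to.
    print(req.full_url)
    # To send it for real, uncomment the lines below:
    # with urllib.request.urlopen(req) as resp:
    #     print(resp.status, resp.read()[:200])
```

If this succeeds from a terminal but the editor still reports a failure, that would suggest the editor is not using the custom base URL for that model, which matches what is described above.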

I once tried using a third-party project to rename the Claude model to something not starting with claude, such as code-3-5-sonnet. This workaround used to work, but recently it stopped working entirely…
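For context, the renaming trick boils down to something like this, as far as I understand it: a small proxy sits in front of the real endpoint and swaps the alias back to the real model ID before forwarding. This sketch shows only the rewrite step; the alias table and `rewrite_model` are hypothetical and not taken from any specific project.

```python
import json

# Hypothetical alias table: name the editor sees -> real upstream model ID.
ALIASES = {"code-3-5-sonnet": "anthropic/claude-3.5-sonnet"}


def rewrite_model(raw_body: bytes, aliases=ALIASES) -> bytes:
    """Replace an aliased model name in an OpenAI-style request body."""
    payload = json.loads(raw_body)
    model = payload.get("model")
    if model in aliases:
        # Swap the alias for the real model ID; leave other fields untouched.
        payload["model"] = aliases[model]
    return json.dumps(payload).encode("utf-8")
```

Of course this only helps if the editor actually sends the request to the custom base URL in the first place.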

Rather than having to rig it using the OpenAI settings, I wish they’d just let us define the API URL and request format per model, so we can add and use whatever custom models we want without the extra hassle or having to disable every other model.