I subscribed to Cursor a few months ago because of its unique context feature and easy GPT-4 access. Today I finally cancelled my subscription after struggling with the AI the whole day. I tried everything: using the whole codebase as context, specific folders, specific files. It loses context every single time. I am sick and tired of the “Unfortunately, without the specific details of your codebase…” messages. The regular GPT-4 chat website does a much better job than Cursor. As other users have also said, it is completely unusable even for the most trivial problems. This is not what I subscribed to at all.

I bought an annual subscription after using only ten GPT-4 requests; that’s how good Cursor was.

Now I find answers faster on Google than by trying to get them from Cursor.

I bought an annual subscription after using the app for a day. It felt good; I completely stopped using the web version of ChatGPT.

However, over time, context issues seemed to pile up. The quality of the answers dropped, and the number of times I needed to start a fresh chat for the same problem increased. The final nail in the coffin was the strange decision to change the IDE’s quick actions menu.

I will probably cancel my subscription in the next few days.

I have the same problem. Cursor has become dumb.

Apologies for this! We definitely want to fix this issue.

We’ve heard several complaints about the model being dumber but aren’t yet sure what the cause is. Our best initial guess is that code blocks aren’t being included in the chat context when users expect them to be, because of especially long conversations, long code files, etc. But we’re really not sure.

If you have specific screenshots or reproduction steps (does this happen in new chats?), that’d be super helpful.

I’m sorry, I don’t have a screenshot to share, but I have tested this. It may not be caused by long conversations alone; that was my initial guess as well. But I noticed context being missed in fresh chats, and context provided in the last message of a long conversation being missed too. Again, I have no empirical evidence, sorry, but my feeling is that last month Cursor was more “intelligent”. I never saw the missing-context problems that I’ve started noticing in the last ten days or so.

Quick update: yesterday we deployed a couple of changes to the prompt prioritization, which we think could have been causing some of this.

If people have updated screenshots from the past day or two, that would be super helpful in debugging any remaining issues with chat performance.

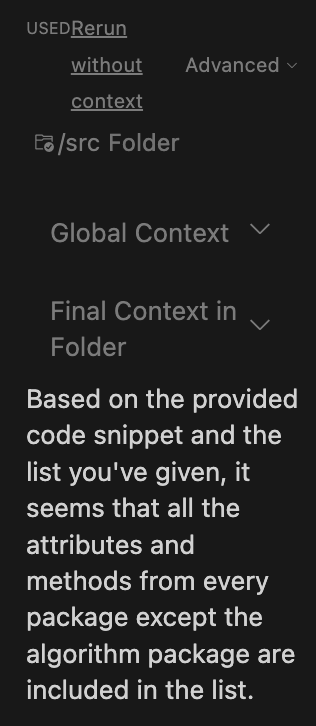

The error still persists. These screenshots are from today. These “errors” occurred after a few chats in the same codebase. The LLM is asked to check approximately 2,000 lines of code, split across about 20 files, for discrepancies. I tried running it with Cmd+Enter, with the same results.

Hi! We definitely want to fix this. If you’re not in privacy mode, could you hit the “thumbs down” button right below the assistant response? That would help us investigate the response.