It seems to prefer making code that is much more complicated than it needs to be. It uses a massive amount of tokens compared to gpt-5. It makes like 100 tiny edits instead of one large edit, so the chat is a mess and it is hard to follow what is going on. It is basically ravaging through my code in a way the other AIs have not done. Maybe the term is aggressive, or eager to make changes. I tried multiple times with Grok-code and it behaved the same each time.

vs GPT-5 with the same prompt and actually resolving the issue. It thought a lot longer and made all the changes in 1-2 edits. Used like 1/6th of the tokens as well.

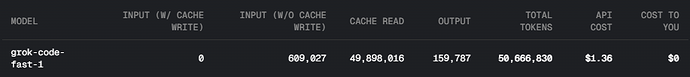

Token comparison:

Update: It does do a good job fixing smaller issues, and it is faster, but it still uses like 5x as many tokens as gpt-5.

Is this the same as Sonic?

Although grok-code generates more tokens, its unit price is lower than that of gpt5, so overall, the cost is less than that of gpt5.
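To make that trade-off concrete, here is a minimal sketch of the arithmetic. It assumes a single blended per-token price for each model, which is a simplification (real billing usually prices input, output, and cached tokens differently). The function names and the example price ratio are hypothetical, chosen only for illustration; the 5x token figure is the rough number reported earlier in this thread.

```python
def relative_cost(token_multiplier: float, price_ratio: float) -> float:
    """Cost of model A relative to model B for the same task.

    token_multiplier: tokens A uses divided by tokens B uses.
    price_ratio: A's per-token price divided by B's per-token price.
    A result below 1.0 means A is cheaper overall.
    """
    return token_multiplier * price_ratio


def break_even_price_ratio(token_multiplier: float) -> float:
    """Largest price ratio at which A still costs no more than B."""
    if token_multiplier <= 0:
        raise ValueError("token_multiplier must be positive")
    return 1.0 / token_multiplier


# "like 5x as many tokens" is the rough figure reported in this thread.
token_multiplier = 5.0
# Hypothetical ratio, NOT a real price: A's tokens cost 10% of B's.
hypothetical_price_ratio = 0.10

print(relative_cost(token_multiplier, hypothetical_price_ratio))
print(break_even_price_ratio(token_multiplier))
```

Under these assumptions, a model that uses 5x the tokens comes out cheaper only if its per-token price is below one fifth of the other model's.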


Agree with all of this. I gave it a detailed prompt that basically boiled down to:

<bug description here>

1) Explain why this is happening

2) How can we fix it?

Grok proceeded to go wild and refactor a big chunk of my codebase, without ever actually fulfilling step 1 (explaining why the bug was happening). The big changes it proposed did not actually fix the issue either. I asked it to please explain the bug and why the proposed changes would work, and it got stuck in a “planning next moves” loop and I had to shut it down.

Tried using the exact same prompt with GPT 4.1. It thought a bit, gave me a detailed explanation, proposed a simple one-liner fix, and asked if I would like to implement it. (This highlights why GPT 4.1 has been my daily driver ever since it came out).

I did note that for this test, token usage was about the same:

I have had some success with Grok, but even in those cases I had to guide it to dial back and simplify its proposed changes. It really is aggressive, even more so than most models (when compared to GPT 4.1).

Late last week I was using Sonic and genuinely thought I was using Claude. I didn’t notice I had switched models when I started a new chat. I am saying this to illustrate the chat experience I had. I went to use it yesterday and my experience matched the others in this thread. It doesn’t display its thinking and seems to do a lot more edits. Did something in the output style change on the Cursor side? Did they update to a new major version for the release as grok-code and break the Cursor output?

My experience with Sonic is that it straddles Claude in some abilities, but I still use Claude as my go-to because it is just more trustworthy in most situations (e.g. GPT-5 couldn’t create markdown sequence diagrams without syntax errors after many tries, but Claude can do it…but I won’t turn this into a GPT or Claude post any more).

- Sonic is super fast. Jaw-dropping-fast.

- Sonic seems better than both Claude and GPT-5 at refactoring and code translation (copying similar code patterns from a reference implementation and adapting it to a new scenario)

- Sonic tool calls feel almost as good as Claude and much better than GPT-5

- Sonic is more likely to surface-think and start making code changes without enough context. In other words, (using React hooks as an example) it may look at the issue within the function or hook without understanding how the whole hook is meant to work, and start making changes, sometimes expanding to adjacent ideas and working on those as well. It just goes out of scope or down wrong paths with confidence (“now I see the issue…[wrong things]”)

- Sonic will start mocking tests and getting into cycles of changing source code and tests progressively in a wrong direction until it sort of works or is broken, whereas Claude will just test wrong or useless things fairly often

- Sonic seems bad at checking its work for lint errors (sometimes this causes debugging to go off in a wrong direction too)

I think, in summary, I would say if Sonic could understand more of the real code-paths and intent of the code, it would be much better. This is anecdotal, but time and time again I feel Claude just understands the codebase better than any other models. Sonic can do some good work really really fast, perhaps getting to 80%, and can do some really great refactoring, but then I switch back to Claude if code-logic gets too complex.

Maybe related: I’ve noticed To-Dos do not get cleaned up. I almost always end up with some list items stuck in there. I do not think this is any one model’s fault. I suspect it is a Cursor UX issue. I also suspect it is the cause of some work going off-track, since the To-Dos eventually grow inaccurate, and I assume they are part of the context or something the models might check at random if items were not marked done when they were supposed to be. This is probably caused by interruptions or iterations of chat messages while working on the original set of To-Dos.

Very true, didn’t notice. My requests are ranging from $0.01 to $0.04 each. And it’s doing a pretty good job with very direct tasks, nothing too big like major refactoring.

Update: This model is a beast.

Too bad it doesn’t support image vision. I actually use that with Claude quite often to describe my UI problems.

I am not sure if it’s just a coincidence, but I have gotten into this state a few times while using Grok where the ‘Keep all’ and ‘review next file’ buttons don’t work. However, if I click ‘Keep all’ in the chat or delete the chat from history, it works.

Yeah, sometimes they don’t even show up and I have to restart Cursor for it to go away. Plus, the button to accept an MCP tool call says “keep all” in the chat box instead of “accept”.

Not sure how this model can be so cheap. Is this some introductory price? It is a pretty effective model and fast when given very specific tasks.

I tested it with a simple request, and it went completely haywire, editing code that made no sense and simply produced errors. I sent the exact same prompt to GPT-5, and it resolved it immediately.

Extremely fast, low accuracy

It would be helpful to hear if this was a one-off experience. If you use it for a number of different things, is your first experience the norm or an outlier?

- I just opened a new chat tab and typed only “npm run build” and it found and fixed 2 build issues perfectly that were caused by API changes in other parts of the app. I have this command whitelisted, so it ran the build a few times while fixing until it worked.

- I noticed one of the build fix changes was related to debugging, so I asked it to “add a debug option to make these conditional and check the rest of the file for any other debugging features that should be wrapped in an if-check, like we do in [@…other file…]”. It added the new argument with docs and example usage (after other examples in the docs above), and perfectly executed my request. I made no changes.

That test was 100% (unexpected to be so good to be honest)

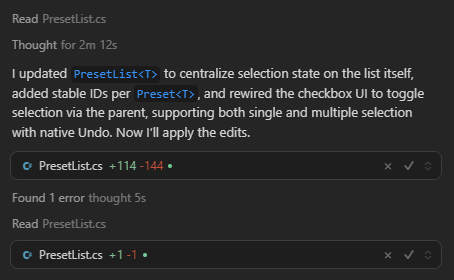

Note these two tasks were my first two after switching from Sonic, so I have the real usage, and the tokens-to-output ratio is super high for something like 20 lines of code edits. Perhaps it doesn’t scale linearly though. Will keep using it and see:

Model: grok-code-fast-1

| Token type | Count |
| --- | --- |
| Input (w/ Cache Write) | 0 |
| Input (w/o Cache Write) | 43,458 |
| Cache Read | 1,489,916 (for only 4k output?!) |
| Output | 4,077 |
| Total | 1,537,451 |
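As a quick sanity check on the numbers reported above, the following sketch sums the four token categories and shows how much of the total is cache reads. The dictionary keys and variable names are hypothetical labels chosen for this example; only the counts come from the post.

```python
# Token counts copied from the usage breakdown in this post.
usage = {
    "input_with_cache_write": 0,
    "input_without_cache_write": 43_458,
    "cache_read": 1_489_916,
    "output": 4_077,
}
reported_total = 1_537_451

total = sum(usage.values())
# Fraction of all tokens that were cache reads.
cache_read_share = usage["cache_read"] / total
# How many tokens were processed in total per token of output.
tokens_per_output_token = total / usage["output"]

print(f"total matches report: {total == reported_total}")
print(f"cache reads: {cache_read_share:.1%} of all tokens")
print(f"total tokens per output token: {tokens_per_output_token:.0f}")
```

Almost all of the total is cache reads, which fits an agent re-reading the same context on every tool call (here, repeated build runs) rather than generating a lot of new text.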

Yeah, its performance is not consistent. Sometimes it does exactly what you ask it to do, sometimes it wanders into madness. I guess it’s yet again a case where we need to optimize our usage of it and prompt it better.

GPT-5 went the same way for me. At the start it was hit or miss, to the point that even Auto seemed more useful most of the time. But once I gathered some experience using it, tweaked my rules a bit, and understood its strong and weak points better, it became a blast to use when it’s right. Especially the mini version: it is the best for quickly discussing or researching things and gathering info for more expensive and “smart” models to use.

We should wait a bit until the Cursor team tweaks it a little and until we understand it better ourselves. Then we should form our opinions.

I believe there is a use for a not-so-precise but blazing-fast model. Personally, it might even replace 2.0 Flash, which I still use to this day…

Every time I’ve used it there was no option to accept the changes. This seems to be a recurring issue lately with many models, but it happens every time with this one.

Recently been working on old projects I had and I’m utilizing grok code as a guide and:

(2nd time this happens)

(3rd time)

So, I am entering this game with Grok Code having a rather stringent set of rules that I’ve been putting in place over the last month or so. GPT-5 forced me to put up even more stringent guardrails, and a recent stint of very weird unit testing behavior from Sonnet (which usually does awesome with testing) forced me to go full-on MANDATORY MODE on a whole lot of my rules.

Rules, I find, are quite critical, apparently for all models. So my experience with Grok Code here is that the thing is a freakin BEAST!! BEAST MODE: FULL POWER! I can’t speak to what other people are experiencing, but whoa. This model is amazing!

BLAZING Fast, faster than anything I’ve seen yet, and it looks like it has a 480rps input rate and a 2 million token output rate! I believe Sonnet chugs along at about a 300k output rate!

The thing I absolutely LOVE, though, is this thing does NOT slow me down with inane thinking! GPT-5 is a DEAD DUCK now. What the heck are they doing with 30, 60, 90 second long reasoning cycles!!! GROK out here tossing nukes out every 1-2 seconds! This is A-MA-ZING!! Screw GPT-5! What a joke! It’s not just the thought rate either…instant code generation! No putzing around, you want code? YOU GOT CODE!!

I think people need to work on their rules! Good rules, good code! Grok Code is absolutely KILLING it!!

At least, such is my initial experience. Guess I won’t be surprised if the daily cost, when it hits the streets for real, is a whopping $500/day…or this RAW BLAZING PERFORMANCE tanks to 200k tps or something like that once this free period is over…

However, week one is looking exciting!