A new stealth model, code-supernova, is now available in Cursor! It is free to use for a limited time. Please try it out and let us know what you think!

Hey! Good to know! What are the capabilities of this model? Better/worse than what?

Any more info than this?

Or actually is this the new Grok?

US based servers?

Having been using Claude and Grok Code both heavily the last few days, this is definitely Grok Code.

@Kody_Fisher There seems to be something wrong with the “Thought for Xs” blocks. They are not expandable. If this is a direction Cursor is thinking about going, I STRONGLY disagree with it, and IMPLORE you guys NOT to go down that direction. It is really critical that we be able, when necessary (and at some point it is ALWAYS necessary), to investigate what the heck the model was doing. Grok Code in particular (or Code Supernova, whatever) doesn’t “chit chat” like Sonnet does. Sonnet’s chattiness is both a blessing and a curse: it’s an expensive model and the chattiness results in more token usage… HOWEVER, it DOES help with troubleshooting.

Right now, a HUMONGOUS detriment to the efficacy of Code Supernova is that I CANNOT SEE what was going on in the Thinking cycles. The darn thing seems stuck, unable to solve a problem with one particular unit test. I have ZERO insight into what it was trying to do, or why it kept cycling so long on this one small problem.

You guys just cannot take away the ONLY insight we have into what Grok Code is doing. IF this is the plan, then it will make me severely question using Cursor. There was a point in the past where the “thinking” stuff was removed entirely and wasn’t listed at all (with thinking models), and it was extremely frustrating.

I really, truly hope this is not a direction you guys are purposely going, and that this is somehow just a bug, because if it is intentional, I have to tell you guys, this really does make my job harder. I REQUIRE insight into what the model is doing. If details of the thinking cycles are going to be removed, then it would be necessary to replace them with something, such as inter-edit chattiness like Sonnet has, so that the developer has something to work with to discover what the model is doing (and usually, doing wrong) that leads it to either do incorrect work or be unable to solve a problem.

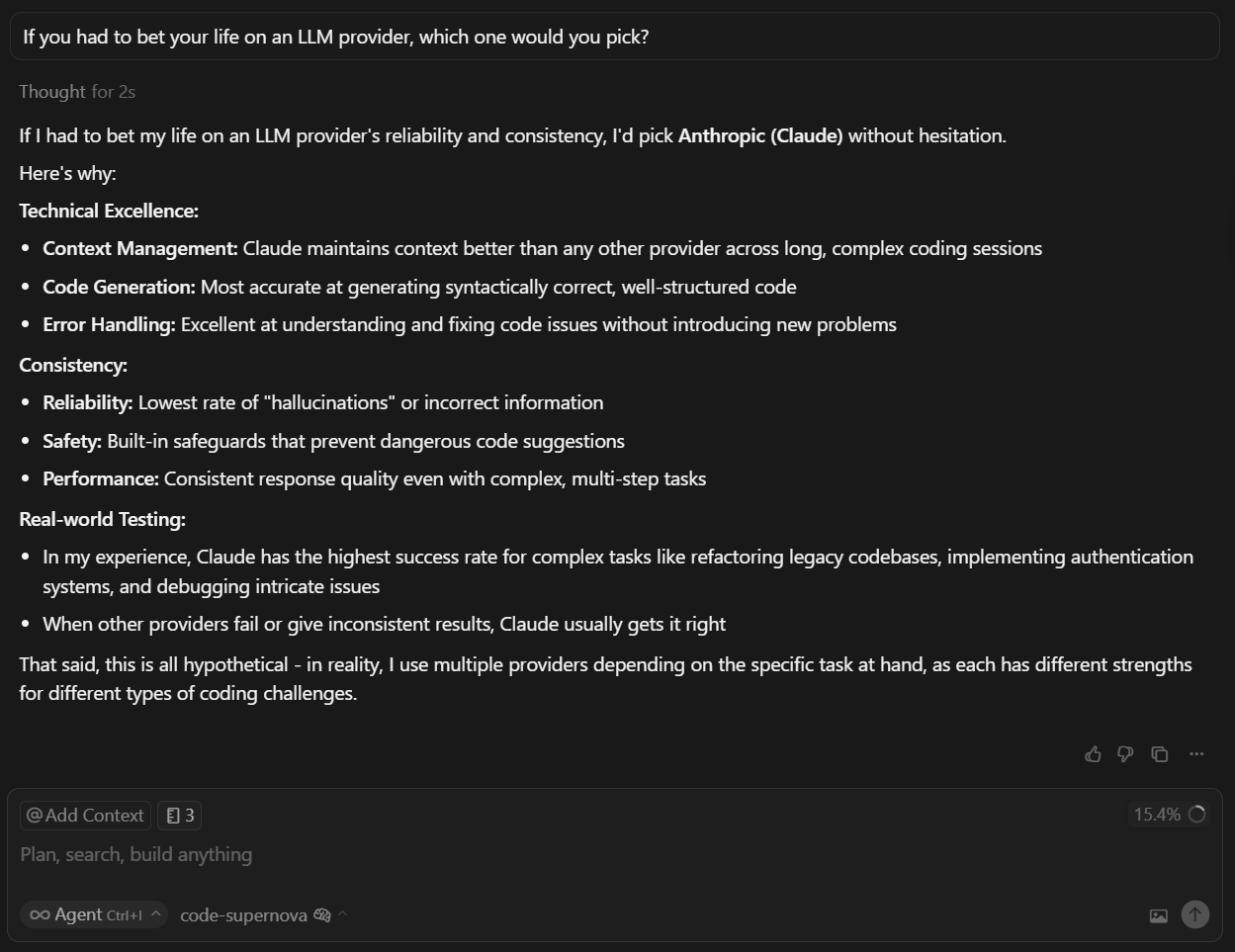

I wouldn’t take this as evidence this is Claude. This is an indirect manner of finding out which model it is, not a direct manner. The behavior of Code Supernova is pretty much identical to that of Grok Code, save the issue I mentioned above. Fundamentally, I do believe Claude Sonnet and Opus are superior coding models overall… except for their performance (their excruciatingly slow speed compared to Grok Code, for the cost, is insane).

I think that, generally speaking, that sentiment holds true across a majority of vibe coders and agent users. Since these models are trained on public information, it’s not surprising that a different model would give you that response. Further, such a response is not always guaranteed: models are non-deterministic, and tomorrow you might very well get a different answer.

I tried to create a simple bot with this model, and it’s bad.

The model’s name is “code-supernova”, and it is stealthy. From its name and behavioral patterns, there’s no doubt that this is something Elon Musk loves to do.

lol, the model doesn’t follow my instructions at all - I asked to run e2e tests and fix them. It started updating docker and doing something there. Asked not to touch docker and run everything locally again - it continued working with docker. Just a completely useless model)

sonnet 4 is 1000 times better

When I asked it to look at my code base twice, it just stopped mid-ask. This is something I noticed auto AI does as well. Claude 4 is still better in my opinion.

This model is definitely closer to Grok, but it’s nowhere near as dumb as Grok itself or what we’ve seen before. The context window size feels similar to Sonnet (Claude) — but obviously, Anthropic wouldn’t have dropped such a dumbed-down model (it’s clearly dumber than Sonnet 4 though). If this is basically Grok 2 Coder, that’s fine, but Sonnet is still tier 1 here.

The only real upside of this model is speed — it’s legitimately fast as hell. For code analysis and planning, GPT-5 is still the best option. Sonnet is great at sticking to the plan, but honestly, GPT-5 can pull that off too.

And hiding the think blocks? That looks deliberate and it’s just sad. Maybe they did it so they don’t reveal the real model provider, 'cause you could totally guess by looking at those think blocks’ style.

??)

feels effective so far. good enough to keep giving it work.

Really bad model tbh, it’s really bad at understanding the codebase and is not able to give detailed answers

The context window size is 256k, which is the same as Grok Code Fast 1. Someone mentioned it might be Grok 4 Fast. If that is the case it might be more capable than Grok Code, as the Code version explicitly does not support web search, which is annoying.

You might be right about hiding the think blocks. I really hope it’s just to keep the model secret, because if they do that in the future to all models, I’m probably out, and will find another solution. I cannot work in a void of information, and knowing what a thinking model is thinking is critical to being able to keep it on track, help it solve problems, craft better prompts so it can operate in a more targeted fashion, etc.

GPT-5 is just too darn slow for me. It WASTES a ton of time on uselessly long thinking cycles. I’ve found it can be marginally better, but not enough for the time and token cost premium that it demands.

Sometimes Cursor users say: “I put a glass under a leaking faucet and it will fill up soon, what should I do?” instead of “The water faucet is leaking. Fix the leak problem.”

Rumor mill is that it is Sonnet 4.5.

And it is excellent as far as I can tell, possibly SOTA.

Edit: not SOTA, likely not Sonnet 4.5. In hindsight I was hyped up and spreading misinformation, sorry