Let him cook!

This is a simple philosophy lesson that may or may not apply to you, but let me know if you find it useful.

Your LLM is a cook. Your own personal chef. You give him a kitchen, utensils, ingredients, recipes and an order. He needs to be familiar with the kitchen, which needs to have utensils and ingredients where he expects them, and he should know how to use all the appliances. He should know the recipes before entering the kitchen, but you may ask him for a specific style. The order is very specific in recipe variation, timing and delivery. But this should be no issue if the cook is vetted, the recipe is time-tested, the ingredients are fresh and of good quality, and your order is reasonable.

Here’s where you make mistakes:

- Your order is too vague or very different from what the cook expects. The cook will have trouble meeting your expectations if you can’t explain what you want, or if you explain it incorrectly. Clear recipes in the book are no issue for the cook. The problem arises when you ask for something between pages, outside the book, or too much at once, and the result becomes slop. This is on you to fix by moving your vibe coding approach towards CONTEXT ENGINEERING.

- You change the cook on the fly. He started cooking, and then you bring in a different cook who has no idea of your previous conversations. He only sees some half-cooked food and some of the ingredients, doesn’t know where the utensils are, and has no idea when you want each order or what spices to use. This is on you to fix: NEVER CHANGE CHEF IN THE KITCHEN.

- You provide incorrect utensils or appliances. The cook will make his best effort, but the results might be unexpected or useless. If you want to prepare the kitchen as well as possible, always ask the chef to CREATE THE RULES OF THE KITCHEN.

- You change the recipe on the fly. While the cook is cooking, you ask for something different from, or completely opposite to, what he’s cooking. The cook will try to salvage some progress, but the result will be inefficient because you CHANGED THE REQUIREMENTS.

- Moving the cook to a different kitchen on the fly is just like changing the cook on the fly. He will fight with everything and slowly try to align the ongoing cooking with familiar patterns. That is why you need to ALWAYS START FROM ZERO WITH A FRESH NEW PROJECT.

The best results will always come when the kitchen is prepared to fit the cook, you never change the cook, and you provide the best ingredients and a clear order, with no leftovers lying around.

If you did everything right, a single complete prompt will auto-run until the cooking finishes successfully. A well-defined, explicit workflow with feature steps, rules and documentation can allow the cook to deliver 15,000 lines of working code that completely and correctly implement your specs.

I won’t recommend specific models, rules files or strategies, other than this: ASK THE AGENT first.

- You don’t know which model is best? Take a small sample feature from one of your larger projects and ask each model to build it.

- You didn’t get the results you wanted at a specific step or at the end? Ask the model why.

- You can’t solve a specific issue? Get a list of mitigation approaches by asking the model how.

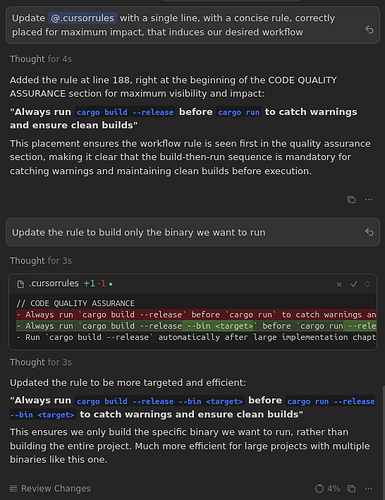

- You need a new rule for the project, for something that keeps bothering you or breaking things? Create the rule by asking the agent.

- You created rules, but they are not respected? You can always rewrite or emphasize them. Ask the agent how.
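
If you want to make the "ask each model" step a bit more systematic, here is a minimal sketch of one way to do it. Everything in it is an assumption for illustration: `compare_models`, `model_a`, `model_b` and the checks are hypothetical names, and the two "models" are stand-in functions that return canned text. In a real setup each one would wrap whatever client you actually use.

```python
from typing import Callable, Dict, List

# Hypothetical convention: a "model" is any function that takes a prompt
# and returns the generated text.
Model = Callable[[str], str]
Check = Callable[[str], bool]


def compare_models(prompt: str, models: Dict[str, Model], checks: List[Check]) -> Dict[str, int]:
    """Send the same prompt to every model and count how many checks each answer passes."""
    scores: Dict[str, int] = {}
    for name, ask in models.items():
        answer = ask(prompt)
        scores[name] = sum(1 for check in checks if check(answer))
    return scores


# Stand-in models so the sketch runs without any network access.
def model_a(prompt: str) -> str:
    return "def add(a, b):\n    return a + b\n"


def model_b(prompt: str) -> str:
    return "I would add the numbers."


# Very rough checks on the sample feature; real ones would run your tests.
checks: List[Check] = [
    lambda out: "def add" in out,
    lambda out: "return" in out,
]

scores = compare_models(
    "Write add(a, b) in Python.",
    {"model_a": model_a, "model_b": model_b},
    checks,
)
best = max(scores, key=scores.get)
print(scores, best)
```

The point is only that every cook gets the same order and is judged by the same checks. How you score the dish is up to you.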

Shift your approach from slop remastering to one-shot cooking. You are not building with bricks or Lego, you are building with high-volume deposition. This is a 3D printer, not a machine shop. You one-shot your project or start over. Instead of fixing a faulty, broken, outdated or misaligned printed piece, you throw it away and print the corrected version.