Experiment overview

I create two git branches from the same parent commit.

In git branch A, I send the two chat turns to Cursor in Agent mode with Claude-4-sonnet.

In git branch B, I send the same two chat turns to Claude Code.

I then compare the cost of implementing the feature in git branch A vs git branch B, measured as the difference between the before and after usage totals.

Start Time: 07-22-2025: 6:22pm KST

- Privacy Mode: Data Sharing Enabled

End Time: 07-22-2025:

Cursor current status:

@Start Time (07-22-2025: 6:22pm KST)

claude-4-sonnet-thinking:

- input (w/ cache write): 4,310,746

- input (w/o cache write): 537,388

- cache read: 26,186,758

- output: 376,728

- total tokens: 31,411,620

- API Cost: $31.79

Total:

- API Cost: $56.71

Cursor Experiment - Git Branch A (Request id: turn3: 7a2eadbb-4fd6-4a61-97de-a083b5ad6e30, turn4: 1331f1b9-4d73-4fbb-bf6b-3a5bea861efd)

Exact same prompt given to Cursor + Claude-4-sonnet and Claude Code

<INSTRUCTIONS_A>

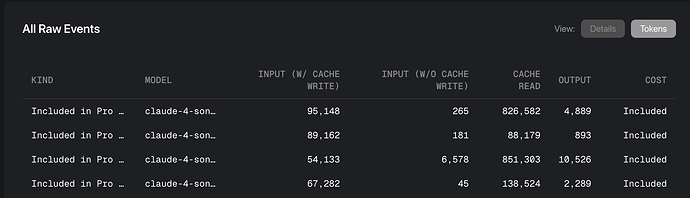

→ Turn 1 cost: claude-4-sonnet-thinking Input (w/ Cache Write): 67,282, Input (w/o Cache Write): 45, Cache Read: 138,524, Output: 2,289, Included

2nd-turn

<INSTRUCTIONS_B>

→ Turn 2 cost: claude-4-sonnet-thinking Input (w/ Cache Write): 54,133, Input (w/o Cache Write): 6,578, Cache Read: 851,303, Output: 10,526, Included

@End time (6:47pm KST)

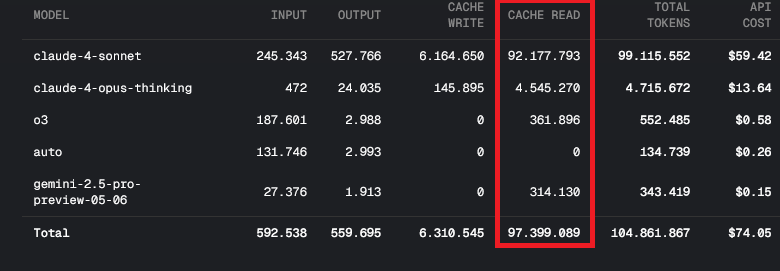

claude-4-sonnet-thinking:

- input (w/ cache write): 4,432,161

- input (w/o cache write): 544,011

- cache read: 27,176,585

- output: 389,543

- total tokens: 32,542,300

- API Cost: $32.75

Total:

- API Cost: $57.67

Turn 1 & 2 cost in dollars: $57.67-$56.71 = $0.96
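As a sanity check, the per-turn usage rows can be compared against the difference between the two dashboard snapshots. The following is a minimal Python sketch using only the numbers quoted above; the dictionary keys are illustrative labels of my own, not a Cursor schema.

```python
# Cumulative claude-4-sonnet-thinking usage at the start and after turn 2.
# Keys are illustrative labels, not an official Cursor schema.
start = {"cache_write": 4_310_746, "input": 537_388,
         "cache_read": 26_186_758, "output": 376_728}
end = {"cache_write": 4_432_161, "input": 544_011,
       "cache_read": 27_176_585, "output": 389_543}

# Per-turn usage rows as reported for turn 1 and turn 2.
turns = [
    {"cache_write": 67_282, "input": 45, "cache_read": 138_524, "output": 2_289},
    {"cache_write": 54_133, "input": 6_578, "cache_read": 851_303, "output": 10_526},
]

def snapshot_delta(before, after):
    """Tokens consumed between two cumulative snapshots."""
    return {kind: after[kind] - before[kind] for kind in before}

def sum_turns(rows):
    """Add up per-turn rows field by field."""
    return {kind: sum(row[kind] for row in rows) for kind in rows[0]}

delta = snapshot_delta(start, end)
reported = sum_turns(turns)
matches = delta == reported
print(delta)
print("per-turn rows match snapshot delta:", matches)
```

If the two dictionaries agree, no other claude-4-sonnet-thinking usage landed on the account between the snapshots, so the dollar difference can be attributed to these two turns.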

The result has a bug, so I do a 3rd turn with Agent mode Cursor + Claude.

3rd-turn

<INSTRUCTIONS_C>

→ Turn 3 cost: claude-4-sonnet-thinking Input (w/ Cache Write): 89,162, Input (w/o Cache Write): 181, Cache Read: 88,179, Output: 893, Included

Turn 3 @End time (6:47pm KST)

claude-4-sonnet-thinking:

- input (w/ cache write): 4,521,323

- input (w/o cache write): 544,192

- cache read: 27,264,764

- output: 390,436

- total tokens: 32,720,715

- API Cost: $33.13

Total:

- API Cost: $58.05

Request id: 7a2eadbb-4fd6-4a61-97de-a083b5ad6e30

Turn 3 cost in dollars: $58.05-$57.67 = $0.38

Another bug, so a 4th turn is needed.

<INSTRUCTIONS_D>

→ Turn 4 cost: claude-4-sonnet-thinking Input (w/ Cache Write): 95,148, Input (w/o Cache Write): 265, Cache Read: 826,582, Output: 4,889, Included

Turn 4 @End time (6:59pm KST)

claude-4-sonnet-thinking:

- input (w/ cache write): 4,616,471

- input (w/o cache write): 544,457

- cache read: 28,091,346

- output: 395,325

- total tokens: 33,647,599

- API Cost: $33.81

Total:

- API Cost: $58.73

Turn 4 cost in dollars: $58.73-$58.05 = $0.68

Cursor Git Branch A total cost: $58.73 - $56.71 = $2.02
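The dollar figures can also be approximated from the token counts alone. The sketch below assumes per-million-token rates of $3.75 (cache write), $3.00 (uncached input), $0.30 (cache read) and $15.00 (output) for Claude Sonnet 4. Those rates are an assumption on my part and are not stated anywhere in this post, so treat the result as an estimate rather than a reproduction of Cursor's billing.

```python
from decimal import Decimal

# ASSUMPTION: USD per million tokens for Claude Sonnet 4. These rates are
# not quoted in the post; replace them if the actual pricing differs.
ASSUMED_RATES = {
    "cache_write": Decimal("3.75"),
    "input": Decimal("3.00"),
    "cache_read": Decimal("0.30"),
    "output": Decimal("15.00"),
}

# Token counts copied from the per-turn usage rows above.
USAGE = {
    "turns 1+2": {"cache_write": 67_282 + 54_133, "input": 45 + 6_578,
                  "cache_read": 138_524 + 851_303, "output": 2_289 + 10_526},
    "turn 3": {"cache_write": 89_162, "input": 181,
               "cache_read": 88_179, "output": 893},
    "turn 4": {"cache_write": 95_148, "input": 265,
               "cache_read": 826_582, "output": 4_889},
}

def estimate_cost(tokens):
    """Estimated USD cost of one usage row under ASSUMED_RATES."""
    weighted = sum(ASSUMED_RATES[kind] * count for kind, count in tokens.items())
    return weighted / Decimal(1_000_000)

estimates = {label: estimate_cost(tokens) for label, tokens in USAGE.items()}
estimated_total = sum(estimates.values())

for label, cost in estimates.items():
    print(f"{label}: ${cost:.2f}")
print(f"total: ${estimated_total:.2f}")
```

Under these assumed rates the estimates land within about a cent of the dashboard differences. The breakdown also shows that cache writes, not output tokens, make up the largest share of the Cursor cost in every step.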

Claude Code Experiment - Git Branch B

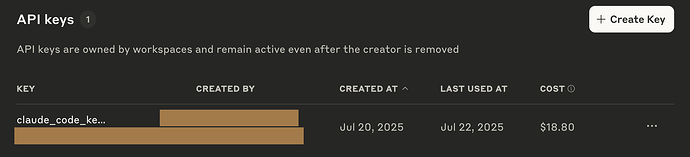

Claude Code API Key

@Start Time

API Key Cost: $18.05

@End Time

API Key Cost: $18.05

Exact same prompt given to Cursor + Claude-4-sonnet and Claude Code

<IDENTICAL_TO_CURSOR_EXPERIMENT_INSTRUCTIONS_A>

2nd-turn for additional clarification

<IDENTICAL_TO_CURSOR_EXPERIMENT_INSTRUCTIONS_B>

@End Time

API Key Cost: $18.27

Turn 1+2 cost in dollars: $18.27-$18.05 = $0.22

There is a bug, so I do a 3rd turn with Claude Code.

3rd-turn

<SMALL_CLARIFICATION_INSTRUCTION_C>

@End Time (7:18pm)

API Key Cost: $18.80

Turn 3 cost in dollars: $18.80 - $18.27 = $0.53

No need for turn 4 because Claude did the implementation successfully.

Claude Code Git Branch B total cost: $18.80 - $18.05 = $0.75

Conclusion

- Cursor Experiment - Git Branch A (Request id: turn3: 7a2eadbb-4fd6-4a61-97de-a083b5ad6e30, turn4: 1331f1b9-4d73-4fbb-bf6b-3a5bea861efd)

Total cost: $58.73 - $56.71 = $2.02

- Claude Code Experiment - Git Branch B

Total cost: $18.80 - $18.05 = $0.75

The exact same feature, with the same chat instructions and the same files attached as context, cost $2.02 to complete in Cursor and $0.75 in Claude Code with an API key.

Cursor cost about 2.7 times as much ($2.02 / $0.75 ≈ 2.69), which is roughly 169% more.
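The per-step costs, the totals, and the ratio between the two tools all follow from the cumulative cost readings recorded above. Here is a small Python sketch of that arithmetic; the variable and function names are illustrative only.

```python
from decimal import Decimal

# Cumulative cost readings in USD, in the order they were recorded.
cursor_readings = [Decimal("56.71"), Decimal("57.67"),
                   Decimal("58.05"), Decimal("58.73")]
claude_code_readings = [Decimal("18.05"), Decimal("18.27"), Decimal("18.80")]

def step_costs(readings):
    """Cost of each step as the difference between consecutive readings."""
    return [after - before for before, after in zip(readings, readings[1:])]

def total_cost(readings):
    """Cost of the whole experiment: last reading minus first reading."""
    return readings[-1] - readings[0]

cursor_total = total_cost(cursor_readings)
claude_code_total = total_cost(claude_code_readings)

# How many times more expensive Cursor was, and the same as "percent more".
ratio = cursor_total / claude_code_total
percent_more = (ratio - 1) * 100

print("Cursor steps:", step_costs(cursor_readings), "total:", cursor_total)
print("Claude Code steps:", step_costs(claude_code_readings), "total:", claude_code_total)
print(f"Cursor / Claude Code = {ratio:.2f}x ({percent_more:.0f}% more)")
```

Using `Decimal` instead of floats keeps the cent-level subtractions exact, which matters when the differences are this small.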

In both cases, I tested the code in the iOS simulator; the two implementations have nearly identical UI and the same functionality.

@T1000

I hope this is sufficient. I’m almost at my account limit with my Pro+ subscription and cannot do any more experiments for a while.